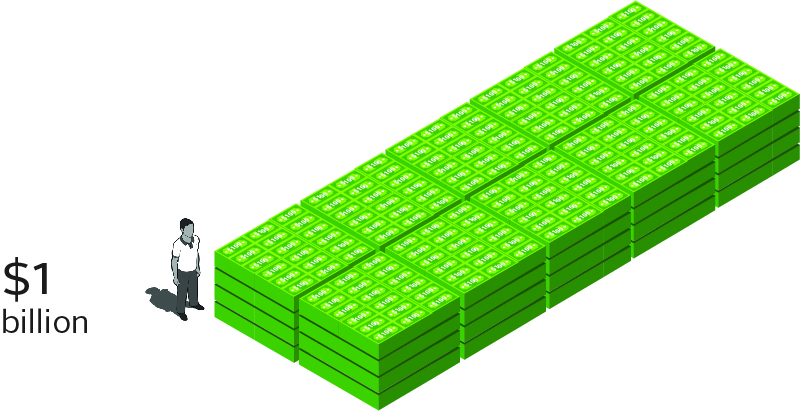

Ransomware attacks have crippled public transportation systems, hospitals and city governments over the past two years, and the threat netted criminals more than $1 billion in 2016, according to the FBI. 2018 seems poised to be another wildly successful year for attackers who use it, and a recent spate of ransomware infections impacting Boeing, the government of Atlanta and the Colorado Department of Transportation hasn't done much to dispel this notion.

But don’t expect ransomware to be as menacing in 2018, said Ross Rustici, Cybereason’s Senior Director for Intelligence Services, who looked at some of the myths around ransomware in a webinar this week.

“Despite the fact that 2017 was a banner year for ransomware intrusions, if you look at data points going back to 2015, when ransomware hit its zenith in infections, there's been a steady decline since then,” he said, adding that this doesn't mean ransomware is no longer a threat.

In this blog, Rustici debunks a few common ransomware myths, talks about what other threats attackers may use instead and explains why organizations still need to remain vigilant against ransomware.

MYTH: RANSOMWARE IS A GROWING TREND

REALITY: RANSOMWARE ATTACKS HAVE PEAKED

Rustici labelled 2017 an “abnormal year” for both ransomware delivery methods and the scale of attacks, and he doesn't expect 2018 to follow suit. The number of ransomware families and infections over the past three years shows that this threat has crested. He noted that in 2015, when ransomware attacks were at their peak, there were an estimated 350 ransomware families. In 2017, that number fell to 170, a reduction of roughly half.

“That shrinkage continues in 2018, but not at the same rate,” he said. “When you are looking at the capabilities that are being baked into new variants, we're seeing a consolidation in ransomware.” From a defender's perspective, this consolidation is positive, since defenders will already be familiar with how the ransomware operates and won't encounter new techniques, Rustici said.

As for infection rates, Rustici said they are trending downward after a temporary spike in 2017. Infections were high in 2015, declined in 2016, ticked up in 2017 because of WannaCry, NotPetya and BadRabbit, and declined again at the end of 2017, a drop that has continued into 2018.

“We've hit the high water mark of ransomware variants from the technology side and also its widespread usage and infection rate,” Rustici said, noting that because ransomware is so effective, there will always be outliers like the Boeing and Atlanta government infections. “But in terms of overall spread and growth, [ransomware] is dropping into the same pool as banking Trojans and other malware that we all hate.”

MYTH: THE SECURITY COMMUNITY FAILED TO PROTECT COMPANIES FROM RANSOMWARE

REALITY: THE SECURITY COMMUNITY CONTAINED RANSOMWARE BY WORKING TOGETHER

The security community has responded to ransomware more forcefully than to other malware. Rustici attributes this to the industry's realization that ransomware eclipses other malware as a major public nuisance.

“You've seen a groundswell response from the security industry overall, whether it's free utilities like RansomFree or researchers figuring out how to decrypt files so [victims] don't have to pay the ransom,” he said.

The security community's response to WannaCry and NotPetya particularly stood out to Rustici. Within 48 hours of each outbreak, hot fixes were available to anyone looking to inoculate themselves without having to purchase security products. Years earlier, the security community had recognized the danger posed by ransomware and saw collaboration as the only way to address it, he said.

“And on this rare occasion, they succeeded more often than not. There will always be variants coming out but as the security community keeps its attention on this and continues to try to combat ransomware, we’re getting a better handle on it compared to other malware,” he said.

MYTH: CRYPTOMINER ATTACKS ARE THE NEXT BIG THING

REALITY: CRYPTOMINER ATTACKS ARE NOT REPLACING RANSOMWARE ATTACKS

Don't expect ransomware attacks to be replaced by a deluge of cryptominer attacks. Because cryptocurrency prices fluctuate wildly, the returns may not justify the effort required to turn a profit, Rustici said.

“Cryptominers are never going to reach the same level of [ransomware] from a deployment perspective simply because the monetary payoff for these types of tools is tied to the cryptomarkets,” he said.

When one bitcoin is worth $20,000, for instance, cryptomining makes sense because the necessary work yields a decent profit. But when bitcoin is trading below $10,000, there's less economic incentive to engage in cryptominer attacks, Rustici said. Rather than becoming a sustained trend, cryptominer attacks will rise and fall with the cryptocurrency market.

“The money isn't there as readily as some of the other things they could be doing with the same time and effort,” he said.

MYTH: BAD USER BEHAVIOR EXPLAINS RANSOMWARE INFECTIONS

REALITY: RANSOMWARE DELIVERY METHODS HAVE EVOLVED

Opening malicious attachments in spear phishing emails and visiting sketchy websites account for many ransomware infections, but careless users aren't the only ones to blame for these attacks. Delivery methods have also evolved, with attackers turning to more technical approaches such as exploit kits.

“They’re using more sophisticated capabilities for delivery mechanisms that ignore the stupid user because as security awareness grows and endpoint protections get better the stupid user becomes a less reliable intrusion vector,” Rustici said.

Attackers started with drive-by downloads and then made their delivery methods more sophisticated to ensure that ransomware remained a profitable threat. In fact, Rustici pointed out that the number of spear phishing emails and infected webpages decreased last year, in relative terms, as they became less effective delivery methods.

Expect attackers to adopt more advanced delivery techniques in 2018 as less sophisticated actors abandon ransomware, which is becoming more difficult to monetize, Rustici said.

WHAT THREAT COULD TAKE RANSOMWARE’S PLACE

As ransomware joins adware, banking Trojans and the rest of the stable of malware that attackers can use, adversaries are likely to adopt a new technique. But predicting the next great threat is challenging, Rustici said.

One possibility is data extortion, perhaps as an unintended consequence of the General Data Protection Regulation (GDPR), he said. Companies that violate GDPR by exposing the personal data of people in the E.U. risk hefty fines. Attackers may give companies the option of paying them not to publicly disclose the breach and the pilfered data. Paying the attackers to keep quiet could cost companies less than a GDPR fine. Rustici noted that some companies have already done this. Uber, for instance, paid attackers $100,000 in an attempt to conceal a breach.

Because attackers adopt only techniques that earn them a profit, organizations will ultimately determine whether data extortion campaigns succeed or fail.

“It’s going to be up to private industries to decide if they want to pay and if this becomes a trend. Once [attackers] realize that there’s easy money to be made, they’re going to jump on the bandwagon,” Rustici said.

RANSOMWARE: DOWN BUT BY NO MEANS OUT

While better endpoint security and shifting economics may mean ransomware is no longer an attacker’s preferred threat, the malware shouldn’t be written off by organizations.

“This isn't a victory lap. The security industry has done a good job of containing ransomware's growth, but it's still out there. We're seeing a downward trend in its usage and the infection rate, but it's still in the hundreds of thousands. This isn't by any means a solved problem. It's just not growing as exponentially as we thought it would,” Rustici said.

Thanks to Cybereason for this article