Anyone who works in network security knows that it is a complicated and involved process. The goal is clear: prevent security breaches. But how do you go about that? There are many schools of thought, methods, and configurations. Here are just a few examples:

- Cyber range training

- Cyber resilience

- Defense in depth

- Encryption

- Endpoint security

- Firewalls

- Inline security tools (IPS, WAF, TLS decryption, etc.)

- Out-of-band security tools (IDS, DLP, SIEM, etc.)

- Penetration testing

- Port scanning

- Security device testing

- Threat hunting

- Threat intelligence

- And the list goes on …

With so many different approaches and activities to focus on, it can become quite confusing. While several of these items stand out as high value, I want to focus on using inline security tools as part of your security architecture. Once you have a basic security architecture in place (firewalls and basic out-of-band security appliances such as an intrusion detection system (IDS) and a data loss prevention (DLP) solution), inline security tools can give you a lot of “bang for the security buck.” This architecture lets you actively screen for threats in real time and block a significant number of them as they come into your network. That immediately reduces your risk, because you inspect traffic as it arrives and eliminate or quarantine anything that appears suspicious.

At the same time, life is never a “bed of rose petals,” right? There are always some thorns. Inline security tools have a clear, high-value benefit (the ability to catch malware and other attacks at the entry into the network, before they start their dirty work), but there are also areas of concern to watch out for: creating a single point of failure, complexity, additional costs, and alert fatigue. The trick is to implement the right type of inline solution, one that eliminates, or at least mitigates, those concerns.

So, what is a best practice for implementing inline security? There are three key items to address in your plan:

- How best to insert the equipment into the network

- How best to inspect the data

- How to make the solution reliable

First, you need to decide how you are going to deploy your inline security tools. For instance, do you plan to insert every appliance directly into the path between your firewall and router? If so, in what order do you plan to insert the devices into the network? Which devices are you planning to deploy, and what happens if you make any architectural changes?

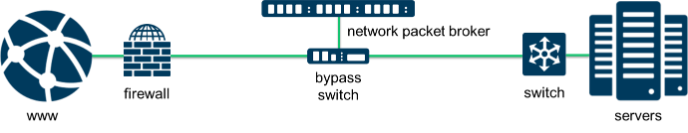

A first reaction to all of these questions is that this can be very overwhelming. You are correct. So, the first recommendation is to maximize flexibility and eliminate complexity here. I would recommend implementing an external bypass switch and a network packet broker (NPB) first. As the figure below shows, the bypass switch is the only piece of equipment that sits directly in the flow of network data. It shunts the data off to the NPB, which distributes it to the security tools for analysis. With this configuration, should anything downstream of the bypass switch need to be changed (the NPB or the security appliances), you don’t have to take the network down to make the change. This makes your network design much simpler than trying to install two, three, four, or more appliances directly inline, one after another. This one decision removes a great deal of complexity and risk.

It is important to have an external bypass switch in the architecture to enable failover capability during upgrades. Certain inline security tools include an internal bypass switch. This becomes a problem when you want to replace the security tool, because you have to shut the network down to do it. Even a software upgrade can be a problem with an internal bypass if the upgrade requires a reset, which means another network shutdown. The simple solution is to use an external bypass so that you don’t have to worry about future upgrades.
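To make the maintenance argument concrete, here is a minimal sketch of how an external bypass switch keeps the link up while the inspection path is being serviced. The `BypassSwitch` class, its method names, and the hop labels are all hypothetical and only model the idea; they are not any vendor's API.

```python
from enum import Enum
from typing import List


class Mode(Enum):
    INLINE = "inline"  # traffic is shunted to the NPB and its tools
    BYPASS = "bypass"  # traffic passes straight through the switch


class BypassSwitch:
    """Toy model of an external bypass switch (hypothetical, not a vendor API)."""

    def __init__(self) -> None:
        self.mode = Mode.INLINE

    def begin_maintenance(self) -> None:
        # Take the NPB and tools out of the path; the network link stays up.
        self.mode = Mode.BYPASS

    def end_maintenance(self) -> None:
        # Put the inspection path back in line.
        self.mode = Mode.INLINE

    def path(self) -> List[str]:
        """Return the hops a packet takes from firewall to router."""
        if self.mode is Mode.INLINE:
            return ["firewall", "bypass", "npb_and_tools", "bypass", "router"]
        return ["firewall", "bypass", "router"]


switch = BypassSwitch()
inline_path = switch.path()
switch.begin_maintenance()          # e.g. while an IPS is being replaced
maintenance_path = switch.path()
switch.end_maintenance()
restored_path = switch.path()
```

In both modes the firewall-to-router link is never interrupted; the only thing that changes is whether the inspection hop is included. Note that traffic is not inspected while the switch is in bypass mode.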

The second consideration is how you plan to inspect the data. If you did not follow the bypass and NPB scenario I just recommended, then you are in for a nightmare scenario. The security appliances will need to be physically arranged in the order in which the data is to be inspected. For instance, does the data go to an intrusion prevention system (IPS) first, then on to a web application firewall (WAF), and then on to another device (possibly a unified threat management (UTM) solution)? After that, how do you plan to handle encrypted data? For instance, are you going to decrypt and re-encrypt at every security tool, or are you going to decrypt once at the start of the chain and send the data all the way back to the front for re-encryption (and, of course, how does that work without going back through the whole chain again)? By the way, how much transmission delay are you adding to your data transit process with these decisions?

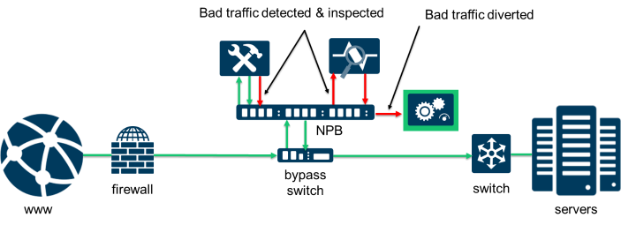

Hopefully you did select the bypass and NPB option. If so, you still need to put some effort into this area, but it is easy compared to the previous discussion. As the next figure shows, once the NPB is in place, you simply connect all of the devices to the NPB and set up filter rules within the NPB for how to route the data. Setting up the filter rules sounds like it could be difficult, but it is not if you choose a packet broker that has a graphical user interface (GUI). This type of solution uses a visual drag-and-drop method to set up the filters.

What is especially beneficial about this solution is that the NPB supports serial data chaining. So, if data sent to the IPS is flagged as suspicious, it can then be sent to a WAF or UTM for further analysis, which will either quarantine the data, kill it, or clear it as okay.
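The filter rules and serial chaining described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `FilterRule`, `route`, `inspect`, the verdict strings, and the toy `ips` and `waf` functions are hypothetical names invented for this example. A real packet broker does this matching in hardware and is configured through its own interface.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Packet = Dict[str, Any]  # a packet is modeled as a plain dict for illustration


@dataclass
class FilterRule:
    """Hypothetical NPB filter rule: a match condition plus an ordered tool chain."""
    name: str
    match: Callable[[Packet], bool]
    chain: List[str]  # tool names, visited in order


def route(packet: Packet, rules: List[FilterRule]) -> List[str]:
    """Return the tool chain of the first matching rule (empty list = no inspection)."""
    for rule in rules:
        if rule.match(packet):
            return rule.chain
    return []


def inspect(packet: Packet, rules: List[FilterRule],
            tools: Dict[str, Callable[[Packet], str]]) -> str:
    """Serial chaining: escalate to the next tool only while the verdict is 'suspicious'."""
    verdict = "clear"
    for tool_name in route(packet, rules):
        verdict = tools[tool_name](packet)
        if verdict == "clear":
            return "forward"
        if verdict == "block":
            return "drop"
    # Chain exhausted: still-suspicious traffic is quarantined, everything else passes.
    return "quarantine" if verdict == "suspicious" else "forward"


# Toy stand-ins for real appliances (assumptions, not real detection logic).
def ips(packet: Packet) -> str:
    return "suspicious" if "'" in packet["payload"] else "clear"


def waf(packet: Packet) -> str:
    return "block" if "DROP TABLE" in packet["payload"].upper() else "clear"


tools = {"ips": ips, "waf": waf}
rules = [FilterRule("web", lambda p: p["dst_port"] in (80, 443), ["ips", "waf"])]

benign = {"dst_port": 80, "payload": "GET /index.html"}
attack = {"dst_port": 80, "payload": "id=1'; drop table users"}
ssh = {"dst_port": 22, "payload": "'"}

results = [inspect(p, rules, tools) for p in (benign, attack, ssh)]
```

Changing the inspection order here means editing the `chain` list in a rule, which mirrors the point of the NPB approach: the order is a configuration setting rather than a cabling decision.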

Another great feature of packet brokers is that they can perform data decryption. This lets you inspect encrypted data for malware, which is a considerable threat now that over half of Internet data is encrypted. If you can’t inspect the data, you have no idea what you just let into your network. An integrated decryption function will typically save you time and money during the inspection process, and it significantly reduces the complexity of dealing with encrypted data compared with handling it without a packet broker. If an external decryption appliance is desired, the NPB can easily support that option as well. You simply connect the device to the NPB and create the filter rules.

The third area to focus on is the reliability of your solution. No one wants to implement an architecture that completely stops the flow of network data or causes intermittent transmission issues. Reliability gets very complicated and expensive if you choose the non-NPB option. For one thing, you will need redundant equipment (which means double the cost). You will also need load balancers and potentially dual routing capabilities.

With the bypass and NPB option, survivability is built in. The bypass switch and NPB both support heartbeat messaging. Should either one fail to get a return heartbeat from a device it monitors, it considers that device down and follows its failover practice. Even in a failover situation, the bypass switch or NPB continues to send heartbeats to see whether the failed device has come back online. If it has, the solution reverts to regular operation. This helps create a self-healing architecture. Proper heartbeat algorithms will also prevent a “tromboning” condition, in which a faulty device fluctuates between being online and offline.
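One common way to keep a heartbeat check from bouncing a faulty device in and out of service is to require several consecutive misses before failing over and several consecutive replies before reverting. The sketch below assumes that approach; the `HeartbeatMonitor` class and its threshold values are hypothetical, and actual products may use different algorithms.

```python
from dataclasses import dataclass


@dataclass
class HeartbeatMonitor:
    """Tracks one monitored device; thresholds are illustrative assumptions."""
    fail_threshold: int = 3      # consecutive misses before declaring the device down
    recover_threshold: int = 5   # consecutive replies before trusting it again
    up: bool = True
    _misses: int = 0
    _replies: int = 0

    def record(self, reply_received: bool) -> bool:
        """Record one heartbeat result and return whether the device is considered up."""
        if reply_received:
            self._replies += 1
            self._misses = 0
            if not self.up and self._replies >= self.recover_threshold:
                self.up = True       # revert to regular operation
        else:
            self._misses += 1
            self._replies = 0
            if self.up and self._misses >= self.fail_threshold:
                self.up = False      # fail over
        return self.up


monitor = HeartbeatMonitor()

# Three missed heartbeats in a row trigger failover.
after_misses = [monitor.record(False) for _ in range(3)]

# A flapping device (reply, miss, reply, miss...) never reaches the recovery threshold.
while_flapping = [monitor.record(ok) for ok in (True, False, True, False, True, False)]

# Five clean replies in a row bring it back into service.
after_recovery = [monitor.record(True) for _ in range(5)]
```

Heartbeats keep being recorded while the device is down, which is what allows the automatic return to normal operation once the device is stable again.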

The NPB also supports multiple survivability options. First, you can have full redundancy of all equipment (redundant bypass switches, redundant NPBs, and redundant security appliances). Second, you can deploy a high availability option for just the NPB, so that it has dual processors running. If one fails, the other will continue to process data. The third option is to use the NPB to support load balancing and n+1 survivability for your security tools. For instance, if you need three tools to process the data load, simply add a fourth tool. The NPB will split the load equally across all four devices. Should any one of these devices fail, the NPB will divide the load across the remaining three. This provides a high level of reliability without the cost of full redundancy. You can also increase your network survivability further by implementing n+2 survivability, all the way up to n+n if you want.
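The n+1 arithmetic is easy to check with a short sketch. The figures used here (a 30 Gbps load and 10 Gbps of capacity per tool) are made-up numbers for illustration, and `distribute` and `survives` are hypothetical helper names, not functions of any packet broker.

```python
from typing import Dict


def distribute(load_gbps: float, tool_health: Dict[str, bool]) -> Dict[str, float]:
    """Split the load equally across the tools that are currently healthy."""
    healthy = [name for name, is_up in tool_health.items() if is_up]
    if not healthy:
        raise RuntimeError("no healthy tools left to inspect traffic")
    share = load_gbps / len(healthy)
    return {name: share for name in healthy}


def survives(load_gbps: float, capacity_gbps: float, deployed: int, failures: int) -> bool:
    """True if the remaining tools can still carry the full load."""
    remaining = deployed - failures
    return remaining > 0 and load_gbps / remaining <= capacity_gbps


# Assumed example: 30 Gbps of traffic, 10 Gbps per tool, so n = 3 and we deploy n+1 = 4.
health = {"ips-1": True, "ips-2": True, "ips-3": True, "ips-4": True}
normal = distribute(30.0, health)          # 7.5 Gbps per tool

health["ips-2"] = False                    # one tool fails
degraded = distribute(30.0, health)        # 10.0 Gbps on each of the remaining three

one_failure_ok = survives(30.0, 10.0, deployed=4, failures=1)
two_failures_ok = survives(30.0, 10.0, deployed=4, failures=2)
```

With four tools deployed, a single failure leaves each survivor exactly at capacity, while a second failure would overload them. Tolerating two failures under these assumed numbers would require a fifth tool, which is the n+2 case.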

You now have all the basic information that you need to start planning an inline security architecture. If you want more information on this topic, try reading this whitepaper.