Over the last couple of years, IP-based video communications and IP videoconferencing have grown explosively, driven by the substantial increase in remote work.

Video communications are expected to be smooth. Network teams need to be prepared, as video brings several unique challenges. This article will explore key video requirements and monitoring strategies to ensure the technology meets user expectations.

Managing in Real Time

The primary challenge that sets videoconferencing apart from other applications is the real-time nature of the service. Even minor quality issues can be highly disruptive. As a result, every effort must be made to ensure the network is “clean” and ready to support live IP communications sessions. The network team must make a concerted effort to test, characterize, and pre-qualify the network as videoconferencing-ready. It also means finding ways to recognize problems as they happen. Identifying and troubleshooting performance quality issues also requires the ability to reconstruct and study incidents in detail.

QoS: It’s Not an Option

Videoconferencing services generate a significant amount of traffic. This means that network Quality of Service (QoS) class definitions and bandwidth allocations must be evaluated. Allocating adequate bandwidth to video and giving it higher precedence also raises the likelihood of contention among other applications for the remaining network resources.
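To make the trade-off concrete, the sketch below checks whether a video class reservation covers peak call demand and how much capacity it leaves for everything else. It is a minimal back-of-the-envelope model, not a QoS configuration: `LinkPlan`, `evaluate`, and every number in the example (link size, 25% video share, 2.5 Mbps per call, 70 Mbps of other traffic) are hypothetical placeholders, not vendor figures.

```python
from dataclasses import dataclass


@dataclass
class LinkPlan:
    link_mbps: float          # total link capacity
    video_share: float        # fraction of the link reserved for the video QoS class
    per_call_mbps: float      # assumed bandwidth per call, including protocol overhead
    concurrent_calls: int     # expected peak number of simultaneous calls
    other_demand_mbps: float  # expected peak demand from all non-video classes


def evaluate(plan: LinkPlan) -> dict:
    """Check whether the video reservation covers peak demand and what it leaves for other traffic."""
    reserved = plan.link_mbps * plan.video_share
    demand = plan.per_call_mbps * plan.concurrent_calls
    remaining = plan.link_mbps - reserved
    return {
        "reserved_mbps": reserved,
        "demand_mbps": demand,
        "fits": demand <= reserved,
        "max_calls": int(reserved // plan.per_call_mbps),
        # Negative headroom means non-video classes compete for less capacity than they need at peak
        "other_headroom_mbps": remaining - plan.other_demand_mbps,
    }


# Hypothetical example: 100 Mbps link, 25% reserved for video, 2.5 Mbps per call
plan = LinkPlan(link_mbps=100.0, video_share=0.25, per_call_mbps=2.5,
                concurrent_calls=8, other_demand_mbps=70.0)
print(evaluate(plan))
```

With these placeholder numbers, eight calls fit inside the reservation, but the non-video classes are left with only about 5 Mbps of headroom at peak. That narrow margin is the contention the paragraph above warns about.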

Configuring Monitoring Metrics

IT teams need to set Key Performance Indicators (KPIs) for videoconferencing quality. Typically, latency, packet loss, and jitter are used as indicators of the network’s ability to support quality video. Metrics specific to videoconferencing are designed to reflect aggregate audio/video experiential quality, such as Video MOS (V-MOS). Although V-MOS is not based on an industry standard (unlike MOS, which is used in VoIP monitoring), it can be of great value if applied consistently to video traffic.
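Packet loss and jitter can be derived directly from the RTP streams that carry most IP video. The sketch below assumes RTP transport and uses the interarrival jitter estimator defined in RFC 3550 (section 6.4.1) together with loss counted from extended sequence numbers. `RtpPacket`, `stream_kpis`, and the 90 kHz default clock rate are illustrative assumptions. Packets must be supplied in arrival order, and RTP timestamp wraparound is not handled.

```python
from dataclasses import dataclass
from typing import Iterable

VIDEO_CLOCK_HZ = 90_000  # RTP clock rate commonly used for video payloads (assumption)


@dataclass
class RtpPacket:
    seq: int          # 16-bit RTP sequence number
    rtp_ts: int       # RTP timestamp in media clock units
    arrival_s: float  # local receive time in seconds


def stream_kpis(packets: Iterable[RtpPacket], clock_hz: int = VIDEO_CLOCK_HZ) -> dict:
    """Compute packet loss and RFC 3550 interarrival jitter for one RTP stream.

    Packets must be given in arrival order. RTP timestamp wraparound is ignored.
    """
    received = 0
    base_seq = None    # first extended sequence number seen
    max_ext = None     # highest extended sequence number seen
    prev_transit = None
    jitter = 0.0       # running estimate in RTP timestamp units

    for p in packets:
        received += 1

        # Extend 16-bit sequence numbers so a wrap (65535 -> 0) is counted correctly.
        if max_ext is None:
            base_seq = max_ext = p.seq
        else:
            forward = (p.seq - max_ext) & 0xFFFF
            if forward < 0x8000:   # newer packet, possibly after a wrap
                max_ext += forward
            # otherwise the packet is late or reordered; max_ext stays unchanged

        # RFC 3550: J += (|D| - J) / 16, where D is the change in relative transit time.
        transit = p.arrival_s * clock_hz - p.rtp_ts
        if prev_transit is not None:
            jitter += (abs(transit - prev_transit) - jitter) / 16
        prev_transit = transit

    if max_ext is None:
        return {"expected": 0, "received": 0, "lost": 0, "loss_pct": 0.0, "jitter_ms": 0.0}

    expected = max_ext - base_seq + 1
    lost = expected - received
    return {
        "expected": expected,
        "received": received,
        "lost": lost,
        "loss_pct": 100.0 * lost / expected,
        "jitter_ms": 1000.0 * jitter / clock_hz,
    }


# Hypothetical capture: 30 fps video, one packet per frame, packet 4 missing
pkts = [RtpPacket(seq=s, rtp_ts=s * 3000, arrival_s=s / 30) for s in range(10) if s != 4]
print(stream_kpis(pkts))
```

The 1/16 smoothing factor comes from RFC 3550. It keeps the jitter figure stable enough to compare against a KPI threshold while still reacting to a burst of delay variation within a few dozen packets.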

Keys to Monitoring Video Health

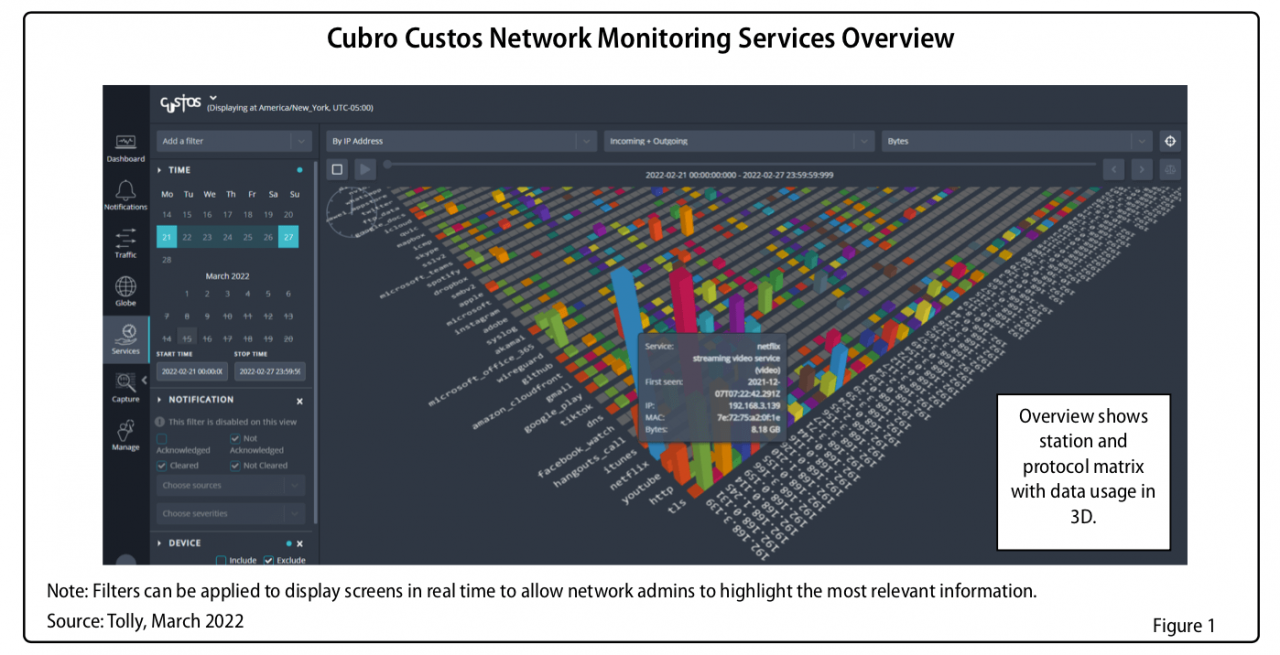

When monitoring videoconference performance, there are several options, ranging from tools provided by the video vendor to comprehensive performance monitoring solutions. The following are six key attributes you’ll want to consider to ensure your team is able to manage overall video health.

| Monitoring Feature | Benefit |
| --- | --- |
| Comprehensive expert video analytics | Critical for immediate problem recognition and resolution. Provides fast, definitive views of VoIP and videoconferencing control protocols and session quality issues, plus IPTV analytics to quantify streaming video health. |
| Multi-vendor support | While initial deployments are typically single-vendor, over the long term organizations tend to roll out video solutions from a mix of vendors. Verify that the monitoring system accommodates multiple manufacturers. |
| Video traffic in context | Viewing video traffic alongside all other IP traffic is key to assessing the impact of other applications and ensuring quick, accurate problem resolution. |
| Long-term capture and storage | Vital for reconstructing video sessions and reviewing problems in detail. Ensures that both communication control and content traffic can be inspected. Make sure the solution can capture at network speeds of up to 10 Gbps, so you can count on having all the packets. |
| Integrated video infrastructure monitoring | Collects and correlates data from the underlying videoconferencing system components alongside system health metrics. Ensure support for popular vendors, including Microsoft®, Cisco®, and Avaya®. |
| Aggregated and in-depth reporting | Measure, track, and generate reports on VoIP and video MOS to validate session quality, along with in-context views that reveal environmental factors and application contention that may be causing problems. |
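Long-term, full-packet capture at 10 Gbps is mostly a storage question. The arithmetic below is a rough sizing sketch. It assumes decimal terabytes and ignores per-packet capture headers, indexing, and compression, so real appliances will differ. The function names and the 100 TB / 50% utilization example are hypothetical.

```python
BITS_PER_BYTE = 8
TB = 10**12  # decimal terabyte, as disk capacity is usually quoted


def capture_storage_tb(line_rate_gbps: float, utilization: float, hours: float) -> float:
    """Disk space (TB) needed to keep every packet for `hours` at the given average utilization."""
    bytes_per_s = line_rate_gbps * 1e9 * utilization / BITS_PER_BYTE
    return bytes_per_s * hours * 3600 / TB


def retention_hours(disk_tb: float, line_rate_gbps: float, utilization: float) -> float:
    """Hours of full-packet history that `disk_tb` terabytes can hold at the given utilization."""
    bytes_per_s = line_rate_gbps * 1e9 * utilization / BITS_PER_BYTE
    return disk_tb * TB / bytes_per_s / 3600


# A fully loaded 10 Gbps link fills 4.5 TB per hour.
print(capture_storage_tb(10, 1.0, 1))
# Hypothetical appliance: 100 TB of disk on a 10 Gbps link averaging 50% utilization.
print(retention_hours(100, 10, 0.5))
```

Under these assumptions, the hypothetical 100 TB appliance holds a little under two days of history. That is enough to reconstruct yesterday's problem call, but not last week's.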

By preparing the network environment, evaluating QoS policies, and having comprehensive videoconferencing monitoring solutions in place, you can feel confident in your ability to meet user expectations with smooth video calls.