In the landscape of Enterprise Network Management, most products (and IT professionals) tend to focus on “traditional” IT monitoring. By that I mean the monitoring of devices, servers, and applications for performance issues and faults. That makes sense because most networks evolve in a similar fashion. They are first built out to accommodate the needs of the business. This primarily involves giving people access to the applications they need to do their jobs. Once the initial buildout is done (or at least slows down), the next phase is typically implementing a monitoring solution to notify the service desk when there are problems. This pattern of growth, implementation, and monitoring continues essentially forever until the business itself changes through an acquisition or (unfortunately) a shutdown.

However, when a business reaches a certain size, a number of new considerations come into play in order to manage the network effectively. The key word here is “manage” as opposed to “monitor”. These are different concepts, and the distinction is important. While monitoring is primarily concerned with the ongoing surveillance of the network for problems (think alarms that result in a service desk incident), network management comprises the processes, procedures, and policies that govern access to devices and changes to those devices.

What is NCCM?

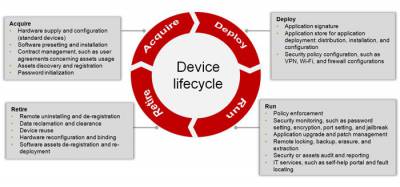

Commonly known by the acronym NCCM, which stands for Network Configuration and Change Management, it is the “third leg” of IT management, which also includes the traditional Performance and Fault Management (PM and FM). The focus of NCCM is to ensure that as network systems move through their common lifecycle (see Figure 1) there are policies and procedures in place that ensure proper governance of what happens to them.

Figure 1. Network Device Lifecycle

Source: huawei.com

NCCM is therefore focused on the device itself as an asset of the organization, and on how that asset is provisioned, deployed, configured, changed, upgraded, moved, and ultimately retired. At each step along the way there should be controls governing Who can access the device (including other devices), How they can access it, What they can do to it (with and without approval), and so on. All NCCM systems should also incorporate logging and auditing so that managers can review what happened if a problem arises later.
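To make the Who / How / What controls and the audit trail more concrete, here is a minimal sketch in Python. The role names, action names, and the `authorize` helper are all hypothetical and chosen only for illustration; a real NCCM product would have its own policy model.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-action policy (an assumption for this sketch).
POLICY = {
    "operator": {"view_config"},
    "engineer": {"view_config", "change_config"},
}
# Actions that additionally require an approved change request.
NEEDS_APPROVAL = {"change_config"}


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def record(self, user, device, action, allowed):
        # Every attempt is logged, whether it was allowed or denied.
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "device": device,
            "action": action,
            "allowed": allowed,
        })


def authorize(user, role, device, action, approved, log):
    """Return True if the role permits the action and any required approval exists."""
    allowed = action in POLICY.get(role, set())
    if allowed and action in NEEDS_APPROVAL:
        allowed = approved
    log.record(user, device, action, allowed)
    return allowed


log = AuditLog()
r1 = authorize("alice", "engineer", "core-sw-01", "change_config", approved=False, log=log)
r2 = authorize("alice", "engineer", "core-sw-01", "change_config", approved=True, log=log)
r3 = authorize("bob", "operator", "core-sw-01", "change_config", approved=True, log=log)
```

In this sketch the denied attempts are recorded alongside the allowed one, which is what lets a manager reconstruct who tried to do what after the fact.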

These controls are becoming more and more important in today’s networks. Depending on which research you read, between 60% and 90% of all unplanned network downtime can be attributed to a mistake made by an engineer when reconfiguring a device. Despite many organizations having strict written policies about when a change can be made to a device, the fact remains that many network engineers can and will log into a production device during working hours and make on-the-fly changes. Of course, no engineer willfully brings down a core device. They believe the change they are making is both necessary and non-invasive. But as the saying goes, “The road to (you know where) is paved with good intentions”.

A correctly implemented NCCM system can therefore mitigate the majority of these unintended problems. By strictly controlling access to devices and forcing all changes to devices to be both scheduled and approved, an NCCM platform can be a lifesaver. Additionally, most NCCM applications use some form of automation to accomplish repetitive tasks, which are another common source of device misconfigurations. For example, instead of a human being making the same ACL change to 300 firewalls (and probably making at least 2-3 mistakes), the NCCM software can perform that task the same way, over and over, without error (and in much less time).
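The idea of a scheduled, approved change pushed identically to every device can be sketched roughly as follows. This is an illustration under stated assumptions, not how any particular NCCM product works: `ChangeRequest`, `apply_change`, and `fake_send` are hypothetical names, the ACL line is a placeholder, and `send` stands in for whatever transport (SSH, an API) a real tool would use.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class ChangeRequest:
    commands: tuple              # the exact lines to push to every device
    approved_by: Optional[str]   # None means the change is not yet approved
    window_start: datetime
    window_end: datetime


def apply_change(change, devices, now, send):
    """Push the same commands to every device, but only if approved and in-window."""
    if change.approved_by is None:
        raise PermissionError("change has not been approved")
    if not (change.window_start <= now <= change.window_end):
        raise PermissionError("outside the scheduled change window")
    # Every device receives the identical command tuple, so no per-device typos.
    return {device: send(device, change.commands) for device in devices}


# Stand-in transport that only records what would have been pushed.
pushed = {}

def fake_send(device, commands):
    pushed[device] = list(commands)
    return "ok"


window_start = datetime(2024, 1, 6, 22, 0)
window_end = datetime(2024, 1, 7, 2, 0)
now = datetime(2024, 1, 6, 23, 30)

firewalls = [f"fw-{n:03d}" for n in range(1, 301)]
change = ChangeRequest(
    commands=("access-list 101 deny ip any host 192.0.2.10",),  # placeholder ACL line
    approved_by="change-board",
    window_start=window_start,
    window_end=window_end,
)
results = apply_change(change, firewalls, now, fake_send)
```

The approval and window checks run once, before any device is touched, so an unapproved or mistimed change fails as a whole rather than being applied to part of the estate.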

As NCCM is more of a general class of products and not an exact standard, there are many additional potential features and benefits of NCCM tools. Many of them can also perform the initial Discovery and Inventory of the network device estate. This provides a useful baseline of “what we have” which can be a critical component of both NCCM and Performance and Fault Management.

Most NCCM tools should also be able to perform a scheduled backup of device configurations. These backups are the foundation for many aspects of NCCM including historical change reporting, device recovery through rollback options, and policy checking against known good configurations or corporate security and access policies.
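As a rough illustration of how stored backups support change reporting and policy checking, the sketch below compares two backups with Python's standard `difflib` module and checks the newer one against a list of required lines. The configuration text, the required lines, and the helper names are made up for the example.

```python
import difflib


def config_diff(previous, current):
    """Return a unified diff between two configuration backups."""
    return list(difflib.unified_diff(
        previous.splitlines(),
        current.splitlines(),
        fromfile="previous",
        tofile="current",
        lineterm="",
    ))


def missing_required_lines(config, required):
    """Return the required policy lines that do not appear in the configuration."""
    present = {line.strip() for line in config.splitlines()}
    return [line for line in required if line not in present]


# Two hypothetical backups of the same device, taken a day apart.
backup_monday = "hostname edge-rtr-01\nservice password-encryption\nno ip http server\n"
backup_tuesday = "hostname edge-rtr-01\nip http server\n"

# Hypothetical corporate policy: these lines must be present on every device.
required = ["service password-encryption", "no ip http server"]

changes = config_diff(backup_monday, backup_tuesday)
violations = missing_required_lines(backup_tuesday, required)

print("\n".join(changes))
print("Policy violations:", violations)
```

Rollback in this picture amounts to pushing the older, known good backup back to the device once the diff or the policy check flags a problem.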

Lastly, tracking the vendor lifecycle for your devices, such as End-of-Life and End-of-Support dates, is another critical component of advanced NCCM products. Future blog posts will explore each of these functions in more detail.

The benefits of leveraging configuration management solutions reach into every aspect of IT.

Configuration management solutions also enable organizations to:

- Maximize the return on network investments by 20%

- Reduce the Total Cost of Ownership by 25%

- Reduce the Mean Time to Repair by 20%

- Reduce Overexpansion of Bandwidth by 20%

Because of these operational benefits, NCCM systems have become a critical component of enterprise network management platforms.

Thanks to NMSaaS for the article.
