Maximizing the Value and Resiliency of Your Deployed Enterprise Security Solution with Intelligent Load Balancing

Correctly implementing a security solution in the presence of complex, high-volume user traffic has always been a difficult challenge for network architects. The data in transit on your network originates from many places and fluctuates in data rate, complexity, and the occurrence of malicious events. Internal users create vastly different network traffic than external users of your publicly available resources. Synthetic traffic from bots has overtaken real users as the most prevalent source of network traffic on the internet. How do you maximize your investment in a security solution while gaining the most value from the deployed solution? The answer is intelligent deployment through realistic preparation.

Let’s say that you have more than one point of ingress and egress into your network, and predicting traffic loads is very difficult (since your employees and customers are global). Do you simply throw money at the problem by purchasing multiple instances of expensive network security infrastructure that could sit idle at some times and be saturated at others? A massive influx of user traffic could overwhelm your security solution in one rack, causing security policies to go unenforced, while the solution at the other point of ingress has resources to spare.

High-speed inline security devices are not just expensive; the more features you enable on them, the less network traffic they can successfully parse. If you start turning on features like sandboxing (which spawns virtual machines to deeply analyze potential new security events), you can really feel the pain.

Combining a network packet broker that has load balancing capability with multiple inline Next Generation Firewalls (NGFWs) into a single logical solution allows you to maximize your security investment. To see how effective this strategy is, we ran four scenarios using an advanced-featured packet broker and load testing tools.
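The tests described here do not state how the packet broker decides which NGFW receives a given packet. One common approach for inline stateful devices is to hash each flow's addresses and ports so that both directions of a conversation land on the same firewall. The sketch below illustrates that idea only; the function name `pick_device`, the choice of SHA-256, and the sample addresses are all assumptions made for illustration, not the behavior of any particular product.

```python
import hashlib
from collections import Counter


def pick_device(src_ip, dst_ip, src_port, dst_port, proto, n_devices):
    """Map a flow to a device index (hypothetical helper, illustration only).

    The two endpoints are sorted before hashing so that client-to-server and
    server-to-client packets of the same flow produce the same key.
    """
    a, b = sorted([(src_ip, src_port), (dst_ip, dst_port)])
    key = f"{a[0]}:{a[1]}|{b[0]}:{b[1]}|{proto}".encode()
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:8], "big") % n_devices


# Both directions of one flow go to the same NGFW.
forward = pick_device("10.0.0.1", "192.0.2.5", 40000, 443, "tcp", 2)
reverse = pick_device("192.0.2.5", "10.0.0.1", 443, 40000, "tcp", 2)

# Many distinct flows (made-up source ports) spread across both NGFWs.
spread = Counter(
    pick_device("10.0.0.1", "192.0.2.5", 10000 + i, 443, "tcp", 2)
    for i in range(10000)
)
print(forward == reverse, dict(spread))
```

Hashing per flow keeps each session's state on one firewall, at the cost of an even split only on average across many flows; a few very large flows can still skew the balance.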

Testing Platform

Using two high-end NGFWs, we enabled nearly every feature (including scanning traffic for attacks, identifying user applications, and classifying network security risk based on the geolocation of the client) and load balanced the two devices using an advanced-featured packet broker. Then, using our load testing tools, we generated traffic representing all of our real users along with a deluge of different attack scenarios. Below are the results of the four testing scenarios.

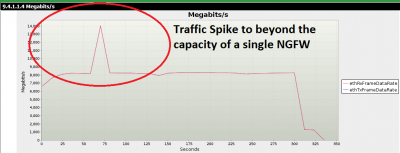

Scenario One: Traffic Spikes

Your 10GbE NGFW will experience inconsistent amounts of network traffic. It is crucial to be able to effectively enforce security policies during such events. In the first test I created a baseline of 8Gbps of real user traffic, then introduced a large influx of traffic that pushed the overall volume to 14Gbps. The packet broker load balancer ensured that the traffic was split between the two NGFWs evenly, and all of my security policies were enforced.
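To see why the even split matters, it helps to work the numbers. The sketch below assumes each NGFW can handle its nominal 10GbE line rate (real throughput with most features enabled is usually lower, so treat this as a best case) and that the load is divided evenly, as observed in the test. The function and constant names are illustrative.

```python
NGFW_CAPACITY_GBPS = 10.0  # assumption: nominal 10GbE line rate per device


def per_device_gbps(total_gbps, devices):
    """Traffic each device sees when the load is split evenly."""
    return total_gbps / devices


def utilization(total_gbps, devices, capacity_gbps=NGFW_CAPACITY_GBPS):
    """Fraction of one device's capacity consumed; above 1.0 means oversubscribed."""
    return per_device_gbps(total_gbps, devices) / capacity_gbps


for label, total in (("baseline", 8.0), ("spike", 14.0)):
    print(
        f"{label}: 1 NGFW {utilization(total, 1):.0%}, "
        f"2 NGFWs {utilization(total, 2):.0%}"
    )
# baseline: 1 NGFW 80%, 2 NGFWs 40%
# spike: 1 NGFW 140%, 2 NGFWs 70%
```

Under these assumptions a single device would be oversubscribed during the 14Gbps spike, while the balanced pair each carries 7Gbps with headroom to spare.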

Figure 1: Network traffic spike

Scenario Two: Endurance Testing

Handling an isolated event is interesting, but maintaining security effectiveness over long periods of time is crucial for a deployed security solution. In the next scenario, I ran all of the applications I anticipated on my network at 11Gbps for 60 hours. The packet broker gave each of my NGFWs just over 5Gbps of traffic, allowing all of my policies to be enforced. Of the 625 million application transactions attempted throughout the duration of the test, users enjoyed a 99.979% success rate.

Figure 2: Applications executed during the 60-hour endurance test

Scenario Three: Attack Traffic

Where the rubber meets the road for a security solution is during an attack. Security solutions are insurance policies against network failure, data exfiltration, misuse of your resources, and loss of reputation. I created a 10Gbps baseline of the user traffic (described in Figure 2) and added a curveball by launching 7261 remote exploits from one zone to another. Had these events not been load balanced with the packet broker, a single NGFW might have experienced the entire brunt of this attack. The NGFW could have been overwhelmed and failed to enforce policies. It might also have been under such duress mitigating the attacks that legitimate users became collateral damage of its attempts to enforce policies. The deployed solution performed excellently, mitigating all but 152 of my attacks.

Concerning the 152 missed attacks: the load testing tool library contains a comprehensive set of undisclosed exploits. That being said, as with the 99.979% application success rate experienced during the endurance test, nothing is infallible. If my test worked with 100% success, I wouldn’t believe it and neither should you.
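For context, the mitigation rate implied by those two figures can be worked out directly. This is plain arithmetic on the numbers reported above; the variable names are illustrative.

```python
exploits_launched = 7261  # remote exploits sent during Scenario Three
exploits_missed = 152     # exploits that were not mitigated

exploits_blocked = exploits_launched - exploits_missed
block_rate = exploits_blocked / exploits_launched

print(f"blocked {exploits_blocked} of {exploits_launched} ({block_rate:.2%})")
# blocked 7109 of 7261 (97.91%)
```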

Figure 3: Attack success rate

Scenario Four: The Kitchen Sink

Life would indeed be rosy if the totality of a content-aware security solution was simply making decisions between legitimate users and known exploits. For my final test I added another wrinkle. The solution also had to deal with a large volume of fuzzing added to my existing deluge of real users and attacks. Fuzzing is the concept of sending intentionally flawed network traffic through a device or at an endpoint in the hope of uncovering a bug that could lead to a successful exploitation. Fuzzed traffic can range from something as simple as incorrectly advertised packet lengths to erroneously crafted application transactions. My test included those two scenarios and everything in between. The goal of this test was stability. I tested this by mixing 400Mbps of pure chaos from the load testing tool's fuzzing engine with Scenario Three’s 10Gbps of real user traffic and exploits. I wanted to make certain that my load-balanced pair of NGFWs was not going to topple over when the unexpected took place.

The results were also exceptionally good. Of the 804 million application transactions my users attempted, only about 4.5 million went awry, leaving me with a 99.436% success rate. Compared with the endurance test, this extra measure of maliciousness changed the user experience only by raising the failure rate by about half a percentage point. Nothing crashed and burned.
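The comparison between the endurance test and the Kitchen Sink test is easier to follow as arithmetic. The sketch below uses only the success rates and transaction totals reported in this article; the helper names are illustrative, and the failure counts are derived from the published percentages rather than measured separately.

```python
def failure_pct(success_pct):
    """Failure rate in percent, given a success rate in percent."""
    return 100.0 - success_pct


def implied_failures(total_transactions, success_pct):
    """Approximate number of failed transactions implied by a success rate."""
    return round(total_transactions * failure_pct(success_pct) / 100.0)


endurance_fail = failure_pct(99.979)  # Scenario Two
kitchen_fail = failure_pct(99.436)    # Scenario Four
delta_points = kitchen_fail - endurance_fail

print(f"endurance failures:    ~{implied_failures(625_000_000, 99.979):,}")
print(f"kitchen sink failures: ~{implied_failures(804_000_000, 99.436):,}")
print(f"failure rate increase: {delta_points:.3f} percentage points")
```

The Kitchen Sink figure works out to roughly 4.5 million failed transactions, and the gap between the two failure rates is about 0.54 percentage points, which is where "about half a percentage point" comes from.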

Figure 4: Application success rates during the “Kitchen Sink” test

Conclusion

All four of the above scenarios illustrate how you can enhance the effectiveness of a security solution while maximizing your budget. However, we are only scratching the surface. What if you needed your security solution to be deployed in a High Availability (HA) environment? What if the traffic your network services expands? Setting up the packet broker to operate in HA, or adding more inline security solutions to be load balanced, is probably the most effective and affordable way of addressing these issues.

Let us know if you are interested in seeing a live demonstration of a packet broker load balancing attacks from a security testing tool across multiple inline security solutions. We would be happy to show you how it is done.

Additional Resources:

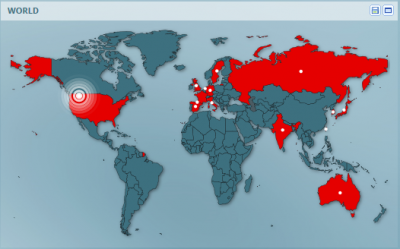

In short, application intelligence is basically the real-time visualization of application level data. This includes the dynamic identification of known and unknown applications on the network, application traffic and bandwidth use, detailed breakdowns of applications in use by application type, and geo-locations of users and devices while accessing applications.
