When chasing security or performance issues in a data center, the last thing you need is packet loss in your visibility fabric. In this blog post I will focus on how to deal with multiple tools that have different but overlapping needs, and why it matters.

Dealing with overlapping filters is critical in both small and large visibility fabrics. Packets are lost when filter overlaps are not properly considered. Ixia’s NTO is the only visibility platform that dynamically deals with all overlaps to ensure that you never miss a packet. Ixia Dynamic Filters ensure complete visibility to all your tools all the time by properly dealing with “overlapping filters.” Ixia has invested more than seven years in developing and refining the filtering architecture of NTO. To see why that matters, it’s important to understand the problem of overlapping filters.

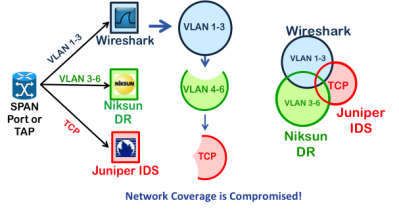

What are “overlapping filters,” I hear you ask? This is most easily explained with a simple example. Let’s say we have one SPAN port and three tools, and each tool needs to see a subset of the traffic.

Sounds simple. We just want to describe three filter rules:

- Tool 1 wants a copy of all packets on VLAN 1-3

- Tool 2 wants a copy of all packets containing TCP

- Tool 3 wants a copy of all packets on VLAN 3-6

Notice the overlaps. For example, a TCP packet on VLAN 3 should go to all three tools. If we just installed these three rules, we would miss some traffic because of the overlaps. This is because once a packet matches a rule, the hardware takes that rule’s forwarding action and moves on to examine the next packet; the remaining rules are never consulted for that packet.
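To make the first-match behavior concrete, here is a minimal Python sketch. It assumes the three rules are installed in the order listed above and models a packet with just a VLAN and a protocol; the names `Packet`, `RULES`, `first_match`, and `wanted_by` are made up for illustration and are not part of any Ixia product.

```python
from dataclasses import dataclass
from typing import Callable, List, Set, Tuple


@dataclass(frozen=True)
class Packet:
    vlan: int
    proto: str  # e.g. "tcp" or "udp"


# (match predicate, destination tool), in installation order (assumed order).
Rule = Tuple[Callable[[Packet], bool], str]

RULES: List[Rule] = [
    (lambda p: 1 <= p.vlan <= 3, "tool1"),  # VLAN 1-3
    (lambda p: p.proto == "tcp", "tool2"),  # anything containing TCP
    (lambda p: 3 <= p.vlan <= 6, "tool3"),  # VLAN 3-6
]


def first_match(packet: Packet, rules: List[Rule]) -> Set[str]:
    """Naive behavior: forward on the first matching rule, then stop."""
    for matches, tool in rules:
        if matches(packet):
            return {tool}
    return set()


def wanted_by(packet: Packet, rules: List[Rule]) -> Set[str]:
    """Every tool whose filter describes this packet."""
    return {tool for matches, tool in rules if matches(packet)}


pkt = Packet(vlan=3, proto="tcp")
delivered = first_match(pkt, RULES)
missed = wanted_by(pkt, RULES) - delivered
print("delivered to:", sorted(delivered))
print("missed by:   ", sorted(missed))
```

With this ordering, the TCP packet on VLAN 3 reaches only the first tool, even though all three filters describe it.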

This is what happens to the traffic when overlaps are ignored. Notice that while the Wireshark tool gets all of its traffic because its rule was first in the list, the Niksun and Juniper tools will miss some packets. The Juniper IDS will not see any of its traffic that is on VLANs 1-6, and the Niksun will not receive packets on VLAN 3. This is bad.

To solve this, we need to describe all the overlaps and put them in the right order. This ensures each tool gets a full view of the traffic. The three overlapping filters above result in seven unique rules as shown below. By installing these rules in the right order, each tool will receive a copy of every relevant packet. Notice that the overlaps are described first, at the highest priority.
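The expansion into seven rules can be reproduced with a brute-force Python sketch. It is not Ixia’s algorithm, only an illustration: it enumerates every combination of filters, keeps the combinations whose intersection is non-empty, and installs the largest overlaps first. The filter representation and the names `FILTERS`, `intersect`, `expand`, and `deliver` are assumptions made for this example.

```python
from itertools import combinations
from typing import Dict, FrozenSet, List, Optional, Set, Tuple

ALL_VLANS = frozenset(range(1, 4095))

# A filter is (set of VLANs, protocol); protocol None means "any protocol".
Filter = Tuple[FrozenSet[int], Optional[str]]

FILTERS: Dict[str, Filter] = {
    "tool1": (frozenset(range(1, 4)), None),  # VLAN 1-3
    "tool2": (ALL_VLANS, "tcp"),              # all TCP
    "tool3": (frozenset(range(3, 7)), None),  # VLAN 3-6
}


def intersect(a: Filter, b: Filter) -> Optional[Filter]:
    """Return the filter matching packets described by both a and b."""
    vlans = a[0] & b[0]
    if not vlans:
        return None
    if a[1] and b[1] and a[1] != b[1]:
        return None
    return (vlans, a[1] or b[1])


def expand(filters: Dict[str, Filter]) -> List[Tuple[Filter, Set[str]]]:
    """Build prioritized rules: biggest overlaps first, single filters last."""
    rules = []
    names = sorted(filters)
    for size in range(len(names), 0, -1):
        for combo in combinations(names, size):
            match: Optional[Filter] = filters[combo[0]]
            for name in combo[1:]:
                match = intersect(match, filters[name])
                if match is None:
                    break
            if match is not None:
                rules.append((match, set(combo)))
    return rules


def deliver(vlan: int, proto: str, rules) -> Set[str]:
    """First-match lookup, as the hardware would do it."""
    for (vlans, rule_proto), tools in rules:
        if vlan in vlans and (rule_proto is None or rule_proto == proto):
            return tools
    return set()


RULES = expand(FILTERS)
print(len(RULES), "rules")
print(sorted(deliver(3, "tcp", RULES)))
```

Because the rule for a larger overlap always sits above the rules for its parts, the first rule a packet hits is the one that names every tool interested in it. The cost is that the number of candidate combinations grows as 2^n - 1 for n filters, which is why doing this by hand does not scale.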

That sounds manageable, but remember this was a very simple example. Typically there are many more filters, lots of traffic sources, multiple tools, and multiple users of the visibility fabric. In addition, changes need to happen on the fly, easily and quickly, without impacting other tools and users.

A simple rule list quickly explodes into thousands of discrete rules. Below you can see two tools and three filters with ranges that can easily result in 1,300 prioritized rules. That is not something a NetOps engineer needs to deal with when trying to debug an outage at 3 a.m.!
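The exact breakdown behind that figure is not reproduced here, but one well-known way range filters multiply is worth sketching. Assuming the hardware matches on value/mask pairs (an assumption; the post does not say how NTO stores its rules), a single numeric range has to be split into aligned power-of-two blocks, and a rule with two ranges needs one entry per pair of blocks. The function name `range_to_prefixes` and the port ranges below are hypothetical.

```python
from typing import List, Tuple


def range_to_prefixes(lo: int, hi: int, bits: int = 16) -> List[Tuple[int, int]]:
    """Cover the inclusive range [lo, hi] with aligned power-of-two blocks.

    Each block is returned as (base value, prefix length), i.e. one
    value/mask entry in a hypothetical match table.
    """
    blocks = []
    while lo <= hi:
        # Largest block that is aligned at lo ...
        size = (lo & -lo) if lo else (1 << bits)
        # ... and that does not run past hi.
        while size > hi - lo + 1:
            size >>= 1
        prefix_len = bits - (size.bit_length() - 1)
        blocks.append((lo, prefix_len))
        lo += size
    return blocks


# Two illustrative 16-bit port ranges combined in one rule.
src_ports = range_to_prefixes(1, 65534)
dst_ports = range_to_prefixes(1024, 65535)
print(len(src_ports), "x", len(dst_ports), "=", len(src_ports) * len(dst_ports))
```

Under these assumptions the range 1-65534 alone needs 30 entries, and every additional range in the same rule multiplies the count. Add overlap handling on top and a short list of filters can plausibly reach the rule counts described above.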

Consider a typical visibility fabric with 50 taps, eight tools, and one operations department with three users. Each user must avoid impacting the traffic of other users, and each user must be able to quickly select the types of traffic they need in order to secure and optimize the network.

With traditional rules-based filtering this becomes impossible to manage.

Ixia NTO is the only packet broker that implements Dynamic Filters, which are the result of many years of investment in filtering algorithms; other visibility solutions implement rules with a priority. Here’s the difference:

- Ixia Dynamic Filters are a simple description of the traffic you want. You do not need to know anything about the machine that selects the traffic for you, about interactions with other filters, or about the complications brought by overlaps.

- Priority-based rules are the lower-level building blocks of filters. Rules require the user to understand and account for overlaps and rule priority to select the right traffic. Discrete rules quickly become headaches for the operator.

Ixia Dynamic Filters remove all this complexity by creating the discrete rules under the hood, and a single filter may require many discrete rules. The complex mathematics required to determine the discrete rules and their priority is handled in seconds by software, instead of taking days of human work. Ixia invented the Dynamic Filter more than seven years ago and has been refining and improving it ever since. Dynamic Filtering software allows us to handle the most complex filtering scenarios in a very simple and easy-to-manage way.

Another cool thing about the Ixia Dynamic Filter software is that it underpins an integrated drag-and-drop GUI and a REST API. Multiple users and automation tools can interact with the visibility fabric simultaneously without fear of impacting each other.

Some important characteristics of Ixia’s Dynamic Filtering architecture:

NTO Dynamic Filters handle overlaps automatically: there is no need for a PhD to define the right set of overlapping rules.

NTO Dynamic Filters have unlimited bandwidth: many ports can aggregate to a single NTO filter, which can feed multiple tools without congestion or dropped packets.

NTO Dynamic Filters can be distributed: filters can span ports, line cards, and distributed nodes without affecting bandwidth or causing congestion.

NTO allows a network port to connect to multiple filters: a single network port can feed several Dynamic Filters at the same time.

NTO has three-stage filtering: in addition to Dynamic Filters, further filters can be applied at the network ports and the tool ports.

NTO filters allow multiple criteria to be combined using powerful Boolean logic: users can pack a lot of logic into a single filter. Each stage supports Pass and Deny AND/OR filters with ‘Source or Destination’, session, and multi-part uni/bi-directional flow options. Dynamic Filters also support passing any packets that didn’t match any other Pass filter, or that matched all Deny filters.

NTO Custom Dynamic Filters cope with offsets intelligently: they can filter from the end of L2 or the start of the L4 payload, skipping over any variable-length headers or tunnels. This is important for dealing with GTP, MPLS, IPv6 extension headers, TCP options, and so on.

NTO Custom Dynamic Filters handle tunneled MPLS and GTP L3/L4 fields at line rate on any port: use predefined custom offset fields to filter on MPLS labels, GTP TEIDs, and inner MPLS/GTP IP addresses and L4 ports on any standard network port interface.

NTO provides comprehensive statistics at all three filter stages: the statistics are so comprehensive that you can often troubleshoot your network based on the data from Dynamic Filters alone. NTO displays packet/byte counts at the input and output of each filter, along with rates, peaks, and charts. The Tool Management View provides a detailed breakdown of the packets/bytes being fed into a tool port by its connected network ports and Dynamic Filters.
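To illustrate why filtering relative to the start of the L4 payload is harder than using a fixed byte offset, here is a small Python sketch. It is a software illustration only, not how NTO does it in hardware, and it covers just one case: Ethernet with optional VLAN tags, then IPv4, then TCP. The function name `l4_payload_offset` is made up for this example.

```python
import struct

VLAN_ETHERTYPES = (0x8100, 0x88A8)  # 802.1Q and 802.1ad tags
ETHERTYPE_IPV4 = 0x0800
IPPROTO_TCP = 6


def l4_payload_offset(frame: bytes) -> int:
    """Return the byte offset of the TCP payload in an Ethernet frame."""
    offset = 12  # skip destination and source MAC addresses
    (ethertype,) = struct.unpack_from("!H", frame, offset)
    offset += 2
    # Each VLAN tag adds 4 bytes: 2 bytes of TCI plus the next EtherType.
    while ethertype in VLAN_ETHERTYPES:
        (ethertype,) = struct.unpack_from("!H", frame, offset + 2)
        offset += 4
    if ethertype != ETHERTYPE_IPV4:
        raise ValueError("only IPv4 is handled in this sketch")
    # IPv4 header length (IHL) is in 32-bit words; IP options make it vary.
    ip_header_len = (frame[offset] & 0x0F) * 4
    if frame[offset + 9] != IPPROTO_TCP:
        raise ValueError("only TCP is handled in this sketch")
    tcp_start = offset + ip_header_len
    # TCP data offset is also in 32-bit words; TCP options make it vary.
    tcp_header_len = (frame[tcp_start + 12] >> 4) * 4
    return tcp_start + tcp_header_len


# Build a tiny untagged frame: IPv4 without options, TCP with 12 option bytes.
ip = bytearray(20)
ip[0] = 0x45         # version 4, IHL 5 (20 bytes)
ip[9] = IPPROTO_TCP
tcp = bytearray(32)
tcp[12] = 8 << 4     # data offset 8 words (32 bytes)
frame = bytes(12) + b"\x08\x00" + bytes(ip) + bytes(tcp) + b"GET /"

start = l4_payload_offset(frame)
print(start, frame[start:])
```

The same payload lands at a different byte position as soon as a VLAN tag, IP options, or TCP options are present, so a filter anchored at a fixed offset from the start of the frame would inspect the wrong bytes.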

In summary, the key benefits you get with Ixia Dynamic Filters are:

- Accurate calculation of the rules required for overlapping filters, 100% of the time.

- Time taken to correctly configure rules reduced from days to seconds.

- No human error when trying to get the right traffic to the right tool.

- Hitless filter installation: not a single packet is dropped when filters are installed or adjusted.

- Easy support for multiple users and automation tools manipulating filters without impacting each other.

- Full automation via a REST API, with no impact on GUI users.

- Robust and reliable delivery of traffic to security and performance management tools.

- Unlimited bandwidth, since Dynamic Filters are implemented in the core of the ASIC and not on the network or tool port.

- Significantly less skill required to manage filters; no need for a PhD.

- Low training investment, because managing the visibility fabric is intuitive.

- More time to focus on security resilience and application performance.

Additional Resources:

Ixia Visibility Architecture

Thanks to Ixia for the article.
