The Growing Impact of Social Networking Trends on Lawful Interception

Lawful Interception (LI) is the legal process by which a communications network operator or Service Provider (SP) gives authorized officials access to the communications of individuals or organizations. With security threats mushrooming in new directions, LI is more than ever a priority and a major focus of Law Enforcement Agencies (LEAs). Regulations such as the Communications Assistance for Law Enforcement Act (CALEA) mandate that SPs place their resources at the service of these agencies to support surveillance and interdiction of individuals or groups.

CALEA makes Lawful Interception a priority mission for Service Providers as well as LEAs; its requirements make unique demands and mandate specific equipment to carry out these high-stakes activities. This paper explores requirements and new solutions for Service Provider networks performing Lawful Interception.

A Fast-Changing Environment Opens New Doors to Terrorism and Crime

In the past, Lawful Interception was simpler and more straightforward because it was confined to traditional voice traffic. Even in the earlier days of the Internet, it was still possible to intercept a target’s communication data fairly easily.

Now, as electronic communications take on new forms and broaden to a potential audience of billions, data volumes are soaring, and the array of service offerings is growing apace. Lawful Interception Agencies and Service Providers are racing to thwart terrorists and other criminals who have the technological expertise and determination to carry out their agendas and evade capture. This challenge will only intensify with the rising momentum of change in communication patterns.

Traffic patterns have changed: In the past it was easier to identify peer-to-peer applications or chat using well-known port numbers. In order to evade LI systems, the bad guys had to work harder. Nowadays, most applications use standard HTTP and in most cases SSL to communicate. This puts an extra burden on LI systems, which must identify more targets overall in larger volumes of data with fewer filtering options.
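The shift can be illustrated with a minimal sketch of port-based classification. The port table and the function name `classify_by_port` are assumptions made for illustration only; they do not describe any particular LI product.

```python
# Hypothetical port-to-application table, for illustration only.
# Real classifiers rely on much larger registries and on other signals.
WELL_KNOWN_PORTS = {
    25: "smtp",
    80: "http",
    443: "tls",
    5222: "xmpp-chat",
    6667: "irc-chat",
    6881: "bittorrent",
}


def classify_by_port(dst_port: int) -> str:
    """Label a flow using nothing but its destination port."""
    return WELL_KNOWN_PORTS.get(dst_port, "unknown")


# A legacy chat client on its own port is easy to single out.
legacy_chat = classify_by_port(6667)

# Chat, video and file sharing carried over TLS on port 443 all get the
# same label, so the port no longer separates one application from another.
modern_flows = [classify_by_port(443) for _ in ("chat", "video", "file-sharing")]

print(legacy_chat)
print(modern_flows)
```

Once everything shares port 443, the port alone carries almost no information, which is why filtering has to fall back on addresses, flow behaviour, or deeper inspection.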

Social Networking in particular is pushing usage to exponential levels, and today’s lawbreakers have a growing range of sophisticated, encrypted communication channels to exploit. With the stakes so much higher, Service Providers need robust, innovative resources that can contend with a widening field of threats. This interception technology must be able to collect volume traffic and handle data at unprecedented high speeds and with pinpoint security and reliability.

LI Strategies and Goals May Vary, but Requirements Remain Consistent

Today, some countries are using nationwide interception systems while others only dictate policies that providers need to follow. While regulations and requirements vary from country to country, organizations such as the European Telecommunications Standards Institute (ETSI) and the American National Standards Institute (ANSI) have developed technical parameters for LI to facilitate the work of LEAs. The main functions of any LI solution are to access Interception-Related Information (IRI) and Content of Communication (CC) from the telecommunications network and to deliver that information in a standardized format via the handover interface to one or more monitoring centers of law enforcement agencies.

High-performance switching capabilities, such as those offered by the Ixia Director™ family of solutions, should map to the following LI standards in order to be effective: They must be able to isolate suspicious voice, video, or data streams for an interception, based on IP address, MAC address or other parameters. They must also be able to carry out filtering at wire speed. Requirements for supporting Lawful Interception activities include:

- The ability to intercept all applicable communications of a certain target without gaps in coverage, including dropped packets, where missing encrypted characters may render a message unreadable or incomplete

- Total visibility into network traffic at any point in the communication stream

- Adequate processing speed to match network bandwidth

- Undetectability, unobtrusiveness, and lack of performance degradation (a red flag to criminals and terrorists on alert for signs that they have been intercepted)

- Real-time monitoring capabilities, because time is of the essence in preventing a crime or attack and in gathering evidence

- The ability to provide intercepted information to the authorities in the agreed-upon handoff format

- Load sharing and balancing of traffic that is handed to the LI system
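One common way to meet the load-sharing requirement without splitting a conversation across tools is to hash each flow symmetrically, so that both directions land on the same monitoring tool. The sketch below assumes a hypothetical helper `pick_tool` and a CRC32 hash; it illustrates the idea and is not a description of how any specific device distributes traffic.

```python
import zlib


def pick_tool(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
              n_tools: int) -> int:
    """Return the index of the monitoring tool that should receive a flow.

    The two endpoints are sorted before hashing, so a packet and its reply
    produce the same key and therefore reach the same tool.
    """
    endpoints = sorted([(src_ip, src_port), (dst_ip, dst_port)])
    key = "|".join(f"{ip}:{port}" for ip, port in endpoints).encode("ascii")
    # crc32 is deterministic across runs, unlike Python's built-in hash()
    # for strings, which is randomized per process.
    return zlib.crc32(key) % n_tools


forward = pick_tool("192.0.2.10", "198.51.100.5", 51000, 443, n_tools=4)
reverse = pick_tool("198.51.100.5", "192.0.2.10", 443, 51000, n_tools=4)
print(forward == reverse)
```

Keeping a whole conversation on one tool matters for the gap-free coverage requirement listed above: a tool that sees only one direction of a session cannot reassemble it.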

From the perspective of the network operator or Service Provider, the primary obligations and requirements for developing and deploying a lawful interception solution include:

- Cost-effectiveness

- Minimal impact on network infrastructure

- Compatibility and compliance

- Support for future technologies

- Reliability and security

Ixia’s Comprehensive Range of Solutions for Lawful Interception

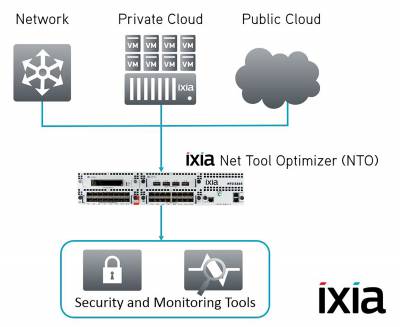

This Ixia customer (the “Service Provider”) is a 4G/LTE pioneer that relies on Ixia solutions. Ixia serves the LI architecture by providing the access part of an LI solution in the form of Taps and switches. These contribute functional flexibility and can be configured as needed in many settings. Both the Ixia Director solution family and the iLink Agg™ solution can aggregate traffic from a group of links and pick out conversations with the same IP address pair from any of the links.
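As a rough illustration of link aggregation, the sketch below merges packets seen on several links and groups them by unordered IP address pair, so that a request on one link and its reply on another end up in the same conversation. The data layout and the names `conversation_key` and `aggregate` are assumptions for this example, not the products' actual interfaces.

```python
from collections import defaultdict


def conversation_key(src_ip: str, dst_ip: str) -> tuple:
    """Order-independent key: A->B and B->A map to the same conversation."""
    return tuple(sorted((src_ip, dst_ip)))


def aggregate(links: dict) -> dict:
    """Group packets from all links by IP address pair.

    `links` maps a link name to a list of (src_ip, dst_ip, payload) tuples.
    """
    conversations = defaultdict(list)
    for link_name, packets in links.items():
        for src_ip, dst_ip, payload in packets:
            key = conversation_key(src_ip, dst_ip)
            conversations[key].append((link_name, payload))
    return dict(conversations)


# Asymmetric routing: the request travels over link-A, the reply over link-B.
links = {
    "link-A": [("192.0.2.10", "198.51.100.5", b"request")],
    "link-B": [("198.51.100.5", "192.0.2.10", b"reply"),
               ("203.0.113.9", "198.51.100.5", b"other")],
}
result = aggregate(links)
print(result[("192.0.2.10", "198.51.100.5")])
```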

Following are further examples of Ixia products that can form a vital element of a successful LI initiative:

Test access ports, or Taps, are devices used by carriers and others to meet the capability requirements of CALEA legislation. Ixia is a global leader in the range and capabilities of its Taps, which provide permanent, passive access points to the physical stream.

Ixia Taps reside in both carrier and enterprise infrastructures to perform network monitoring and to improve both network security and efficiency. The passive characteristic of Taps means that network data is not affected whether the Tap is powered or not. As part of an LI solution, Taps have proven more useful than Span ports. If Law Enforcement Agencies must reconfigure a switch to send the right conversations to the Span port every time an intercept is required, a risk arises of misconfiguring the switch and connections. Also, Span ports drop packets, which is another significant monitoring risk, particularly for encrypted traffic.

Director xStream™ and iLink Agg xStream™ enable deployment of an intelligent, flexible and efficient monitoring access platform for 10G networks. Director xStream’s unique TapFlow™ filtering technology enables LI to focus on select traffic of interest for each tool based on protocols, IP addresses, ports, and VLANs. The robust engineering of Director xStream and iLink Agg xStream enables a pool of 10G and 1G tools to be deployed across a large number of 10G network links, with remote, centralized control of exactly which traffic streams are directed to each tool. Ixia xStream solutions enable law enforcement entities to view more traffic with fewer monitoring tools and to relieve oversubscribed 10G monitoring tools. In addition, law enforcement entities can share tools and data access among groups without contention and centralize data monitoring in a network operations center.
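Filtering on protocols, IP addresses, ports, and VLANs, with each matching stream steered to a chosen tool, can be sketched in software as below. This is an illustrative model only: the classes `Packet` and `FilterRule`, the `route` function, and the tool names are hypothetical, and hardware filtering such as TapFlow is implemented very differently.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass(frozen=True)
class Packet:
    protocol: str
    src_ip: str
    dst_ip: str
    dst_port: int
    vlan: Optional[int] = None


@dataclass(frozen=True)
class FilterRule:
    """A rule for one tool; a field left as None acts as a wildcard."""
    tool: str
    protocol: Optional[str] = None
    subnet: Optional[str] = None
    dst_port: Optional[int] = None
    vlan: Optional[int] = None

    def matches(self, pkt: Packet) -> bool:
        if self.protocol is not None and pkt.protocol != self.protocol:
            return False
        if self.subnet is not None:
            net = ip_network(self.subnet)
            # Match if either endpoint of the packet is inside the subnet.
            if ip_address(pkt.src_ip) not in net and ip_address(pkt.dst_ip) not in net:
                return False
        if self.dst_port is not None and pkt.dst_port != self.dst_port:
            return False
        if self.vlan is not None and pkt.vlan != self.vlan:
            return False
        return True


def route(pkt: Packet, rules: list) -> list:
    """Return the names of all tools whose rule matches the packet."""
    return [rule.tool for rule in rules if rule.matches(pkt)]


rules = [
    FilterRule(tool="voip-recorder", protocol="udp", dst_port=5060),
    FilterRule(tool="target-capture", subnet="203.0.113.7/32"),
    FilterRule(tool="vlan-monitor", vlan=100),
]
sip_pkt = Packet("udp", "203.0.113.7", "198.51.100.5", 5060, vlan=100)
web_pkt = Packet("tcp", "192.0.2.10", "198.51.100.5", 443)
print(route(sip_pkt, rules))
print(route(web_pkt, rules))
```

Because each tool receives only the traffic its rule selects, several tools can share the same links without each having to process the full stream.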

Director Pro™ and Director xStream Pro data monitoring switches offer law enforcement the ability to perform better pre-filtering via Deep Packet Inspection (DPI) and to home in on a specific phone number or credit card number. These products differ from other platforms that might have the ability to seek data within portions of the packet, thanks to a unique ability to filter content or perform pattern matching in hardware and at wire speed, potentially up to Layer 7. Such DPI provides the ability to apply filters to a packet or multiple packets at any location, regardless of packet length, how “deep” the packet is, or where the data to be matched sits within the packet. In that sense, DPI matching is independent of the packet's length and layout.
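The idea of matching a pattern wherever it sits in the payload can be shown with a small software sketch that looks for card-number-like digit runs and confirms them with the Luhn checksum. The regular expression and the function names are assumptions for illustration; the products described here perform this kind of matching in hardware, which this example does not attempt to model.

```python
import re

# 13 to 19 digits, optionally separated by single spaces or hyphens.
CANDIDATE = re.compile(rb"(?:\d[ -]?){12,18}\d")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit counted from the right."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_card_numbers(payload: bytes) -> list:
    """Return Luhn-valid digit strings found at any offset in the payload."""
    hits = []
    for match in CANDIDATE.finditer(payload):
        digits = re.sub(rb"\D", b"", match.group()).decode("ascii")
        if luhn_valid(digits):
            hits.append(digits)
    return hits


# 4111 1111 1111 1111 is a widely used test number that passes the Luhn check.
# The 500 bytes of padding show that the offset of the match does not matter.
deep_payload = b"x" * 500 + b"card=4111 1111 1111 1111&ok=1"
print(find_card_numbers(deep_payload))
print(find_card_numbers(b"id=1234 5678 9012 3456"))
```

The checksum step reduces false positives: a 16-digit order ID matches the pattern but is unlikely to pass the Luhn test.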

Thanks to Ixia for the article.

