Blind spots are a long-established threat to virtual data centers. They are inherent to virtual machine (VM) technology and usage for four reasons: the nature of VMs, the lack of visibility into inter- and intra-VM traffic, the typical practices around the use of VMs, and the use of multiple hypervisors in enterprise environments.

Virtual machines by their nature hide inter- and intra-VM traffic, because that traffic stays within a very small physical area. As I mentioned in a previous blog post, Do You Really Know What’s Lurking in Your Data Center?, Gartner Research found that 80% of VM traffic never reaches the top of the rack, where it can be captured by traditional monitoring technology. This means that if something is happening to that 80% of your data (security threat, performance issue, compliance issue, etc.), you’ll never know about it. This is a huge area of risk.

In addition, an Ixia-conducted market survey on virtualization technology, released in March 2015, exposed a high propensity for data center blind spots to exist due to typical data center practices. The report showed that hidden data, i.e., blind spots, probably exists on typical enterprise data networks because of inconsistent monitoring practices, a lack of monitoring practices altogether in several cases, and the typical lack of one central group responsible for collecting monitoring data.

For instance, only 37% of the respondents were monitoring their virtualized environment with the same processes that they use in their physical data center environments, and the monitoring that was done usually used fewer capabilities in the virtual environment. This means that key monitoring information may NOT be captured for the virtual environment, which could lead to security, performance, and compliance issues for the business. In addition, only 22% of businesses designated the same staff to be responsible for monitoring and managing both their physical and virtual technology. Having different groups responsible for monitoring practices and capabilities often leads to inconsistencies in data collection and in the execution of company processes.

The survey further revealed that only 42% of businesses monitor the personally identifiable information (PII) transmitted and stored on their networks. At the same time, two-thirds of the respondents were running critical applications within their virtual environment. Taken together, these “typical practices” should definitely raise warning signs for IT management.

Additional research by firms like IDC and Gartner is exposing another set of risks for enterprises around the use of multiple hypervisors in the data center. For instance, the IDC Virtualization and the Cloud 2013 study found that 16% of customers had already deployed or were planning to deploy more than one hypervisor. Another 45% were open to the idea in the future. In September 2014, another IDC market analysis stated that over half of enterprises (51%) now have more than one type of hypervisor installed. A Gartner poll in July 2014 also corroborated that multiple hypervisors were being used in enterprises.

This trend is positive, as having a second hypervisor is a good strategy for an enterprise. Multiple hypervisors allow you to:

- Negotiate pricing discounts by simply having multiple suppliers

- Help address corporate multi-vendor sourcing initiatives

- Provide improved business continuity scenarios for product-centric security threats

But it is also very troubling, because the cons include:

- Extra expenses for the set-up of a multi-vendor environment

- Poor to no visibility into a multi-hypervisor environment

- An increase in general complexity (particularly management and programming)

- And further complexities if you have advanced data center initiatives (like automation and orchestration)

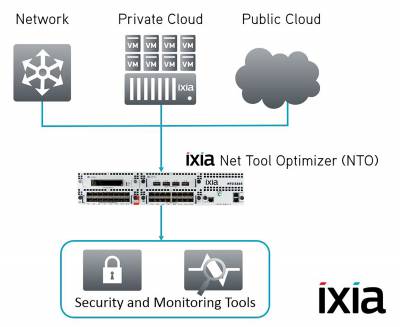

One of the primary concerns is lack of visibility. With a proper visibility strategy, the other cons of a multi-hypervisor environment can be either partially or completely mitigated. One way to accomplish this goal is to deploy a virtual tap that includes filtering capability. The virtual tap gives you access to all the data you need. This data can then be forwarded to a packet broker, which distributes the information to the right tool(s). Built-in filtering capability is an important feature of the virtual tap because it lets you limit costs and bandwidth requirements.
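To make the filtering idea concrete, here is a minimal Python sketch of how a tap might decide which inter-VM packets to forward to a packet broker. It is an illustration only, not the Phantom vTap's actual interface: the `Packet` and `FilterRule` types, the `select_for_export` function, and the sample addresses and ports are all hypothetical.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class Packet:
    """Hypothetical, simplified view of one inter-VM packet."""
    src: str
    dst: str
    dst_port: int
    size_bytes: int


@dataclass(frozen=True)
class FilterRule:
    """Hypothetical rule: match traffic touching a subnet, optionally on one port."""
    subnet: str
    dst_port: Optional[int] = None  # None means "any port"

    def matches(self, pkt: Packet) -> bool:
        net = ip_network(self.subnet)
        in_subnet = ip_address(pkt.src) in net or ip_address(pkt.dst) in net
        port_ok = self.dst_port is None or pkt.dst_port == self.dst_port
        return in_subnet and port_ok


def select_for_export(packets: Iterable[Packet], rules: List[FilterRule]) -> List[Packet]:
    """Keep only packets that match at least one rule; everything else stays local."""
    return [p for p in packets if any(r.matches(p) for r in rules)]


# Made-up sample traffic between VMs on the same host.
traffic = [
    Packet("10.0.1.5", "10.0.1.9", 443, 1200),
    Packet("10.0.2.7", "10.0.2.8", 3306, 900),
    Packet("10.0.3.4", "10.0.3.6", 80, 1500),
]
rules = [
    FilterRule("10.0.1.0/24"),                 # everything on this subnet
    FilterRule("10.0.2.0/24", dst_port=3306),  # only database traffic here
]

exported = select_for_export(traffic, rules)
exported_bytes = sum(p.size_bytes for p in exported)
```

In this sketch only the first two packets match a rule, so the third never leaves the host. That is the point of filtering at the tap: the monitoring tools receive the traffic they care about without the full inter-VM load being exported.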

Blind spots can create the following issues:

- Hidden security issues

- Inadequate access to data for trending

- Inadequate data to demonstrate proper regulatory compliance policy tracking

Virtual taps (like the Ixia Phantom vTap) address blind spots and their inherent dangers.

If the virtual tap is integrated into a holistic visibility approach using a Visibility Architecture, you can streamline your monitoring costs. Instead of having two separate monitoring architectures with potentially duplicate equipment (and duplicate costs), you have one architecture that maximizes the efficiency of all your current tools, as well as any future investments.

When installing the virtual tap, the key is to make sure that it installs into the hypervisor without adversely affecting the hypervisor. Once this is accomplished, the virtual tap will have the access to inter- and intra-VM traffic that it needs, as well as the ability to efficiently export that information. After this, the virtual tap will need a filtering mechanism so that exported data can be limited and does not overload the LAN/WAN infrastructure. The last thing you want to do is cause performance problems on your network. Details on these concepts and best practices are available in the whitepapers Illuminating Data Center Blind Spots and Creating A Visibility Architecture.
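As a rough illustration of why filtering matters for the LAN/WAN, the Python sketch below estimates the export rate of a tap and checks it against a bandwidth budget. All names and numbers are hypothetical assumptions chosen for the example (the 4,000 Mbps of inter-VM traffic, the 5% filter pass fraction, the 10 Gbps uplink, and the 10% share reserved for monitoring); none of them come from the Ixia survey or product documentation.

```python
def export_rate_mbps(inter_vm_mbps: float, pass_fraction: float) -> float:
    """Estimated export rate: inter-VM traffic times the fraction the filter lets through."""
    if not 0.0 <= pass_fraction <= 1.0:
        raise ValueError("pass_fraction must be between 0 and 1")
    return inter_vm_mbps * pass_fraction


def fits_budget(export_mbps: float, link_capacity_mbps: float, max_share: float = 0.10) -> bool:
    """True if exported monitoring traffic stays within the allowed share of the link."""
    return export_mbps <= link_capacity_mbps * max_share


# Hypothetical host: 4,000 Mbps of inter-VM traffic, 10 Gbps uplink.
INTER_VM_MBPS = 4000.0
UPLINK_MBPS = 10000.0

unfiltered = export_rate_mbps(INTER_VM_MBPS, 1.0)   # export everything
filtered = export_rate_mbps(INTER_VM_MBPS, 0.05)    # filter passes about 5%

unfiltered_ok = fits_budget(unfiltered, UPLINK_MBPS)
filtered_ok = fits_budget(filtered, UPLINK_MBPS)
```

Under these assumed figures, exporting everything would exceed the monitoring budget on the uplink, while the filtered stream fits comfortably. Real numbers depend entirely on your own traffic mix and filter rules.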

As mentioned earlier, a multi-hypervisor environment is now a fact of life for the enterprise. The Ixia Phantom vTap supports multiple hypervisors and has been optimized for VMware ESX and Kernel-based Virtual Machine (KVM) environments. KVM, which has been part of the Linux kernel since 2007, is starting to make a big push into the enterprise environment. According to IDC, shipments of the KVM license were around 5.2 million units in 2014, and it expects that number to increase to 7.2 million by 2017. Much of the KVM ecosystem is organized by the Open Virtualization Alliance, and the Phantom vTap supports its recommendations.

To learn more, please visit the Ixia Phantom vTap product page, read the Ixia State of Virtualization for Visibility Architectures 2015 report, or contact us to see a Phantom vTap demo!

Additional Resources:

Ixia Phantom vTap

Ixia State of Virtualization for Visibility Architectures 2015 report

White Paper: Illuminating Data Center Blind Spots

White Paper: Creating A Visibility Architecture

Blog: Do You Really Know What’s Lurking in Your Data Center?

Thanks to Ixia for the article.