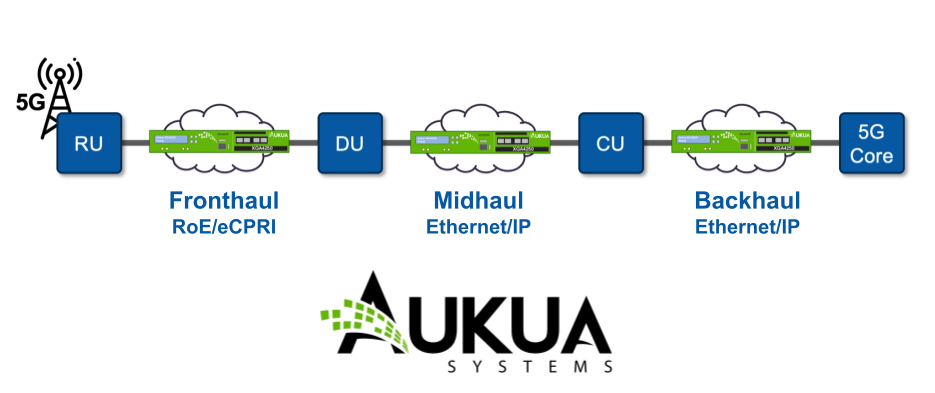

In the world of 5G and Open RAN (O-RAN), “good enough” testing simply doesn’t cut it. As networks disaggregate into Radio Units (RU), Distributed Units (DU), and Centralized Units (CU), the margins for error shrink to microseconds. The fronthaul interface is unforgiving, and interoperability between vendors is never guaranteed.

This is where Aukua Systems distinguishes itself. Unlike traditional, bloated test equipment, Aukua offers a nimble, hardware-based “3-in-1” architecture that combines a Network Impairment Emulator, Traffic Generator, and Inline Protocol Analyzer.

At Telnet Networks, we rely on Aukua to help our customers move from the lab to the live edge with confidence. Here is how Aukua’s XGA4250 platform is solving the specific challenges of O-RAN.

The Aukua Advantage: 3-in-1 Capability

Aukua’s core value proposition is versatility without sacrificing precision. In a single 1U chassis, engineers get three distinct tools required for O-RAN validation:

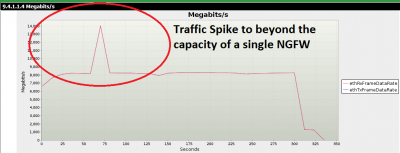

- Traffic Generation: To stress-test throughput and load.

- Impairment Emulation: To inject real-world chaos (delay, jitter, drops) to see if the network survives.

- Inline Capture & Analysis: To see exactly what is happening on the wire with nanosecond precision.

The XGA4250 for High-Speed Fronthaul & Midhaul

The XGA4250 is the industry workhorse for 25GbE O-RAN testing. It is specifically designed to handle the strict latency requirements of the 5G fronthaul and the buffering challenges of the midhaul.

Key 5G/O-RAN Use Cases:

- Fronthaul Latency Validation (eCPRI/RoE): The link between the RU and DU is highly sensitive to latency and jitter. The XGA4250 can emulate sub-millisecond delays with nanosecond precision, allowing you to verify that your fronthaul transport (e.g., eCPRI) meets the strict timing budget required by the O-RAN Alliance.

- Midhaul Stress Testing: For the DU-to-CU link (midhaul), typically running at 25GbE, the XGA4250 can emulate delays of 20 ms or more. This is critical for testing how the CU handles buffering and retransmissions when the DU is located miles away.

- Protocol-Aware Impairment: The XGA4250 doesn’t just delay everything blindly. Its classifier technology allows you to target specific protocols. For example, you can delay eCPRI user-plane packets while letting PTP (Precision Time Protocol) and SyncE (ESMC) traffic pass through untouched. This lets you stress the fronthaul user plane without breaking the network’s synchronization.
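To put the midhaul delay figures in perspective, it helps to translate fiber distance into propagation delay. The Python sketch below is a rough back-of-the-envelope conversion, not an Aukua tool. It assumes a group index of about 1.468 for standard single-mode fiber (roughly 4.9 µs per kilometre); the constant and function names are illustrative.

```python
# Rough conversion between fiber length and one-way propagation delay.
# Assumption: group index of about 1.468 for standard single-mode fiber,
# i.e. roughly 4.9 microseconds of delay per kilometre.
SPEED_OF_LIGHT_M_PER_S = 299_792_458
FIBER_GROUP_INDEX = 1.468  # assumed typical value; check your fiber datasheet
KM_PER_MILE = 1.609344
FIBER_SPEED_M_PER_S = SPEED_OF_LIGHT_M_PER_S / FIBER_GROUP_INDEX


def fiber_delay_us(distance_km: float) -> float:
    """One-way propagation delay (microseconds) over distance_km of fiber."""
    return distance_km * 1_000 / FIBER_SPEED_M_PER_S * 1e6


def fiber_distance_km(delay_ms: float) -> float:
    """Fiber length (km) whose one-way propagation delay is delay_ms."""
    return delay_ms / 1_000 * FIBER_SPEED_M_PER_S / 1_000


if __name__ == "__main__":
    for miles in (10, 50, 200):
        km = miles * KM_PER_MILE
        print(f"{miles:>4} mi ({km:6.1f} km): {fiber_delay_us(km):7.1f} us one way")
    print(f"20 ms one way ~ {fiber_distance_km(20):,.0f} km of fiber")
```

By this estimate, a DU 10 miles from its CU sees only about 80 µs of propagation delay, while 20 ms corresponds to roughly 4,000 km of fiber. Delay settings at the high end of the range are therefore better read as modelling long-haul paths or accumulated queueing in the transport network than fiber propagation alone.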
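The XGA4250 classifier runs in hardware and is configured through Aukua’s own interface. The Python sketch below is only a hypothetical illustration of the matching logic a protocol-aware impairment applies, built on standard EtherType values: 0xAEFE for eCPRI, 0x88F7 for PTP, and the 802.3 Slow Protocols EtherType 0x8809 with subtype 0x0A for the ESMC frames that carry SyncE quality levels. The function names, the policy table, and the 50 µs delay are assumptions for illustration, not Aukua’s API.

```python
from typing import Optional

# Standard EtherType / protocol values (not Aukua-specific).
ETH_P_ECPRI = 0xAEFE   # eCPRI over Ethernet
ETH_P_PTP = 0x88F7     # IEEE 1588 PTP over Ethernet
ETH_P_SLOW = 0x8809    # IEEE 802.3 Slow Protocols
ESMC_SUBTYPE = 0x0A    # Organization Specific Slow Protocol subtype used by ESMC
VLAN_TPIDS = (0x8100, 0x88A8)  # 802.1Q and 802.1ad tags
ECPRI_IQ_DATA = 0x00   # eCPRI message type 0: IQ Data (user plane)

# Hypothetical impairment policy: only eCPRI IQ data is delayed (50 us here).
DELAY_POLICY_NS = {"ecpri-iq": 50_000}


def classify(frame: bytes) -> str:
    """Label an Ethernet frame by protocol, skipping any VLAN tags."""
    if len(frame) < 14:
        return "other"
    offset = 12
    ethertype = int.from_bytes(frame[offset:offset + 2], "big")
    while ethertype in VLAN_TPIDS and len(frame) >= offset + 6:
        offset += 4  # skip the 4-byte tag (TPID + TCI)
        ethertype = int.from_bytes(frame[offset:offset + 2], "big")
    payload = frame[offset + 2:]
    if ethertype == ETH_P_ECPRI and len(payload) >= 2:
        # eCPRI common header: byte 0 = revision/flags, byte 1 = message type
        return "ecpri-iq" if payload[1] == ECPRI_IQ_DATA else "ecpri-other"
    if ethertype == ETH_P_PTP:
        return "ptp"
    if ethertype == ETH_P_SLOW and payload[:1] == bytes([ESMC_SUBTYPE]):
        # SyncE frequency rides on the line itself; ESMC frames are the only
        # packet-visible part. A stricter check would also verify the ITU-T OUI.
        return "esmc"
    return "other"


def added_delay_ns(frame: bytes) -> int:
    """Delay to apply under the hypothetical policy (0 = pass untouched)."""
    return DELAY_POLICY_NS.get(classify(frame), 0)


def build_frame(ethertype: int, payload: bytes, vlan_id: Optional[int] = None) -> bytes:
    """Build a minimal test frame with zeroed MAC addresses."""
    header = bytes(12)
    if vlan_id is not None:
        header += (0x8100).to_bytes(2, "big") + vlan_id.to_bytes(2, "big")
    return header + ethertype.to_bytes(2, "big") + payload


if __name__ == "__main__":
    iq = build_frame(ETH_P_ECPRI, bytes([0x10, ECPRI_IQ_DATA, 0x00, 0x04]) + bytes(4), vlan_id=100)
    ptp = build_frame(ETH_P_PTP, bytes(44))
    esmc = build_frame(ETH_P_SLOW, bytes([ESMC_SUBTYPE]) + bytes(27))
    for name, frame in (("eCPRI IQ", iq), ("PTP", ptp), ("ESMC", esmc)):
        print(f"{name:9} -> {classify(frame):9} delay {added_delay_ns(frame)} ns")
```

Matching on the eCPRI message type as well as the EtherType is what separates user-plane IQ data (type 0) from other eCPRI traffic such as real-time control messages (type 2), so the two can be impaired independently.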


Why the “Inline” Approach Matters

One of the biggest headaches in O-RAN is “finger-pointing” between vendors. When the DU and RU aren’t talking, who is at fault?

Aukua’s Inline Capture capability solves this. By sitting transparently between the network elements, the XGA and MGA platforms can capture traffic at line rate without disturbing the link.

- Nanosecond Visibility: You can see exactly when a packet left the DU and when it arrived at the RU.

- Layer 1 PCS Capture: Aukua goes deeper than standard tools, allowing you to capture Layer 1 Physical Coding Sublayer (PCS) bits. This is often where obscure interoperability issues hide, such as symbol errors that standard packet sniffers miss.
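Nanosecond timestamps become useful once they are turned into delay and jitter figures. The following Python sketch is not Aukua’s software; it is a hypothetical illustration of what per-packet timestamps from two capture points (DU side and RU side) let you compute. It assumes each packet can be matched across both points by a unique key such as a sequence number, and that both sets of timestamps share one timebase. Packet delay variation is reported as a simple peak-to-peak value (max minus min), one of several definitions in use.

```python
from statistics import mean
from typing import Dict, Optional


def transit_stats(ingress_ns: Dict[int, int], egress_ns: Dict[int, int],
                  budget_ns: Optional[int] = None) -> dict:
    """Summarize per-packet transit delay between two capture points.

    ingress_ns / egress_ns map a per-packet key (assumed unique, e.g. a
    sequence number) to a capture timestamp in nanoseconds. Both sets of
    timestamps are assumed to share a common timebase.
    """
    matched = sorted(k for k in ingress_ns if k in egress_ns)
    delays = [egress_ns[k] - ingress_ns[k] for k in matched]
    # Packets seen on the DU side but never on the RU side count as missing.
    stats = {"matched": len(delays), "missing": len(ingress_ns) - len(delays)}
    if delays:
        stats.update(
            min_ns=min(delays),
            max_ns=max(delays),
            mean_ns=mean(delays),
            pdv_ns=max(delays) - min(delays),  # peak-to-peak delay variation
        )
        if budget_ns is not None:
            # Count packets that exceeded a (hypothetical) one-way budget.
            stats["over_budget"] = sum(d > budget_ns for d in delays)
    return stats


if __name__ == "__main__":
    du_side = {1: 1_000, 2: 2_000, 3: 3_000, 4: 4_000}
    ru_side = {1: 1_850, 2: 2_900, 4: 5_020}
    print(transit_stats(du_side, ru_side, budget_ns=1_000))
```

In the sample data, packet 3 never reaches the RU side and packet 4 takes 1,020 ns against a 1,000 ns budget, which is exactly the kind of evidence that settles a DU-versus-RU dispute.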
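To see why PCS-level capture catches problems a packet sniffer cannot, consider the 64b/66b coding used by the 25GBASE-R PCS (based on the 10GBASE-R PCS in IEEE 802.3 Clause 49). Each 66-bit block starts with a 2-bit sync header: 01 marks a data block, 10 a control block, and 00 or 11 is invalid. By the time traffic reaches a conventional packet capture, such errors typically surface, if at all, only as errored or discarded frames. The Python sketch below tallies sync headers from a list of captured blocks; the integer block representation is an assumption for illustration, not Aukua’s capture format.

```python
# 64b/66b sync-header check (IEEE 802.3 Clause 49, reused by 25GBASE-R).
# Assumed representation: each 66-bit block is a Python int with the sync
# header in the top two bits, first-transmitted header bit most significant.
from typing import Dict, Iterable

SYNC_DATA = 0b01   # all-data block
SYNC_CTRL = 0b10   # block containing control characters
PAYLOAD_BITS = 64


def sync_header(block: int) -> int:
    """Extract the 2-bit sync header from a 66-bit block."""
    return (block >> PAYLOAD_BITS) & 0b11


def count_sync_headers(blocks: Iterable[int]) -> Dict[str, int]:
    """Tally data, control, and invalid (0b00 / 0b11) sync headers."""
    counts = {"data": 0, "control": 0, "invalid": 0}
    for block in blocks:
        header = sync_header(block)
        if header == SYNC_DATA:
            counts["data"] += 1
        elif header == SYNC_CTRL:
            counts["control"] += 1
        else:
            counts["invalid"] += 1
    return counts


if __name__ == "__main__":
    captured = [
        (SYNC_CTRL << PAYLOAD_BITS) | 0x1E,        # control block
        (SYNC_DATA << PAYLOAD_BITS) | 0xDEADBEEF,  # data block
        (0b11 << PAYLOAD_BITS) | 0xDEADBEEF,       # corrupted header
        (0b00 << PAYLOAD_BITS),                    # corrupted header
    ]
    print(count_sync_headers(captured))
```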


Ready to see the Aukua difference in your lab? Contact Telnet Networks today to schedule a demo of the XGA4250.