I was recently reading an article in TechCrunch titled “The Problem With The Internet Of Things,” in which the author lamented how bad design or a poor rollout of good ideas can kill promising markets. In his example, turning on the lights in a room through the Internet of Things (IoT) became a five-step process rather than the simple one-step process we currently use (the light switch).

This illustrates the gap between the grand idea and the practicality of the market: it’s awesome to contemplate a future where exciting technology impacts our lives, but only if the realities of everyday use are taken into account. As he states, “Smart home technology should work with the existing interfaces of households objects, not try to change how we use them.”

Part of the problem is that the IoT is still just a nebulous concept. Its everyday implications haven’t been worked out. What does it mean when all of our appliances, communications, and transportation are connected? How will they work together? How will we control and manage them? The details of how users will actually participate in the experience are the real driver of technology success. And too often, this aspect is glossed over or ignored.

And, once everything is connected, will those connections be a door for malware or hacktivists to bypass security?

Part of the solution to getting new technology to customers in a meaningful way, one that delivers both a quality end-user experience and a profitable model for the provider, is network validation and optimization. Application performance and security resilience are key when rolling out, providing, integrating, or securing new technology.

What do we mean by these terms? Well:

- Application performance means we enable successful deployments of applications across our customers’ networks

- Security resilience means we make sure customer networks are resilient to the growing security threats across the IT landscape

Companies deploying applications and network services—in a physical, virtual, or hybrid network configuration—need to do three things well:

- Validate. Customers need to validate their network architecture to ensure they have a well-designed, properly provisioned network with the right third-party equipment to achieve their business goals.

- Secure. Customers must secure their network performance against all the various threat scenarios—a threat list that grows daily and impacts their end users, brand, and profitability.

(Just over last Thanksgiving weekend, Sony Pictures was hacked and five of its upcoming pictures leaked online—with the prime suspect being North Korea!)

- Optimize. Customers seek network optimization by obtaining solutions that give them 100% visibility into their traffic, eliminating blind spots. They must monitor application traffic and receive real-time intelligence to ensure the network is performing as expected.

Ixia helps customers address these pain points, and achieve their networking goals every day, all over the world. This is the exciting part of our business.

When we discuss solutions with customers, no matter who they are—Bank of America, Visa, Apple, NTT—they all do three things the same way in their networks:

- Design—Envision and plan the network that meets their business needs

- Rollout—Deploy network upgrades or updated functionality

- Operate—Keep the production network seamlessly providing a quality experience

These are the three big lifecycle stages for any network design, application rollout, security solution, or performance design. Achieving these milestones successfully requires three processes:

- Validate—Test and confirm design meets expectations

- Secure—Assess the performance and security in real-world threat scenarios

- Optimize—Scale for performance, visibility, security, and expansion

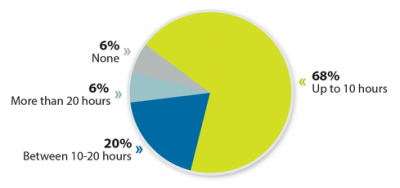

So when it comes to new technology and new applications of that technology, we are in an amazing time, as evidenced by the fact that nine billion devices will be connected to the Internet in 2018. Examples of these new applications include Audio Video Bridging, Automotive Ethernet, and Bring Your Own Apps (BYOA). Ixia sees only huge potential, and is a first line of defense in creating the kind of quality customer experience that ensures satisfaction, brand excellence, and profitability.

Additional Resources:

Article: The Problem With The Internet Of Things

Ixia visibility solutions

Ixia security solutions

Thanks to Ixia for the article.